Why AI‑generated research needs an audit trail

AI models now draft everything from literature reviews to entire manuscripts, and in 2025 that’s normal in many labs. The problem is that large language models generate *plausible* text, not guaranteed truth. They can invent citations, subtly distort statistical claims and gloss over methodological caveats. If you publish or rely on that output without a systematic check, you risk propagating errors into meta‑analyses, policy documents and clinical guidelines. Auditing is therefore less about distrusting AI and more about imposing a verification layer: tracing claims back to primary sources, validating numbers, measuring bias and documenting what has actually been checked before the text enters the scholarly record.

Core principles of auditing AI outputs

Before diving into tools, it helps to fix a mental model of what “reliability” means. Auditing AI‑generated research outputs combines four checks: factual accuracy, source integrity, methodological coherence and ethical compliance. The workflow should mimic a tough peer reviewer: every quantitative result is re‑computed, every reference is confirmed, and every normative claim is inspected for hidden assumptions. Instead of asking whether the AI text “sounds right”, the question becomes: can each statement be grounded in verifiable evidence, and is there a transparent trail showing who (human or AI) did what, with which dataset, at which step?

Necessary tools for AI research auditing

You don’t need a massive infrastructure to start, but you do need a curated toolbox. At minimum, combine a citation index (Scopus, Web of Science, Google Scholar) with domain databases like PubMed, arXiv or SSRN. On top of that, add AI content detectors that flag segments likely to be machine‑generated, not to penalise those segments but to prioritise them for deeper review. For integrity checks, use reference managers, version control (Git, DVC) and a structured note‑taking system (Obsidian, Notion) to track what the AI produced, what you kept and what you corrected during the audit.

Specialised software stack and services

Since 2023, a whole ecosystem of AI‑aware quality checkers has appeared. Some of the more capable audit tools for scientific articles integrate directly with manuscript editors and run layered checks: citation validity, statistical consistency, journal guideline compliance and disclosure of language‑model use. Complement them with reliability checkers that benchmark claims against curated knowledge graphs or domain‑specific corpora. Finally, add plagiarism and fact‑checking services that scan simultaneously for text reuse, paraphrased overlap, fabricated references and mismatches between what a cited article says and what the draft claims it says.

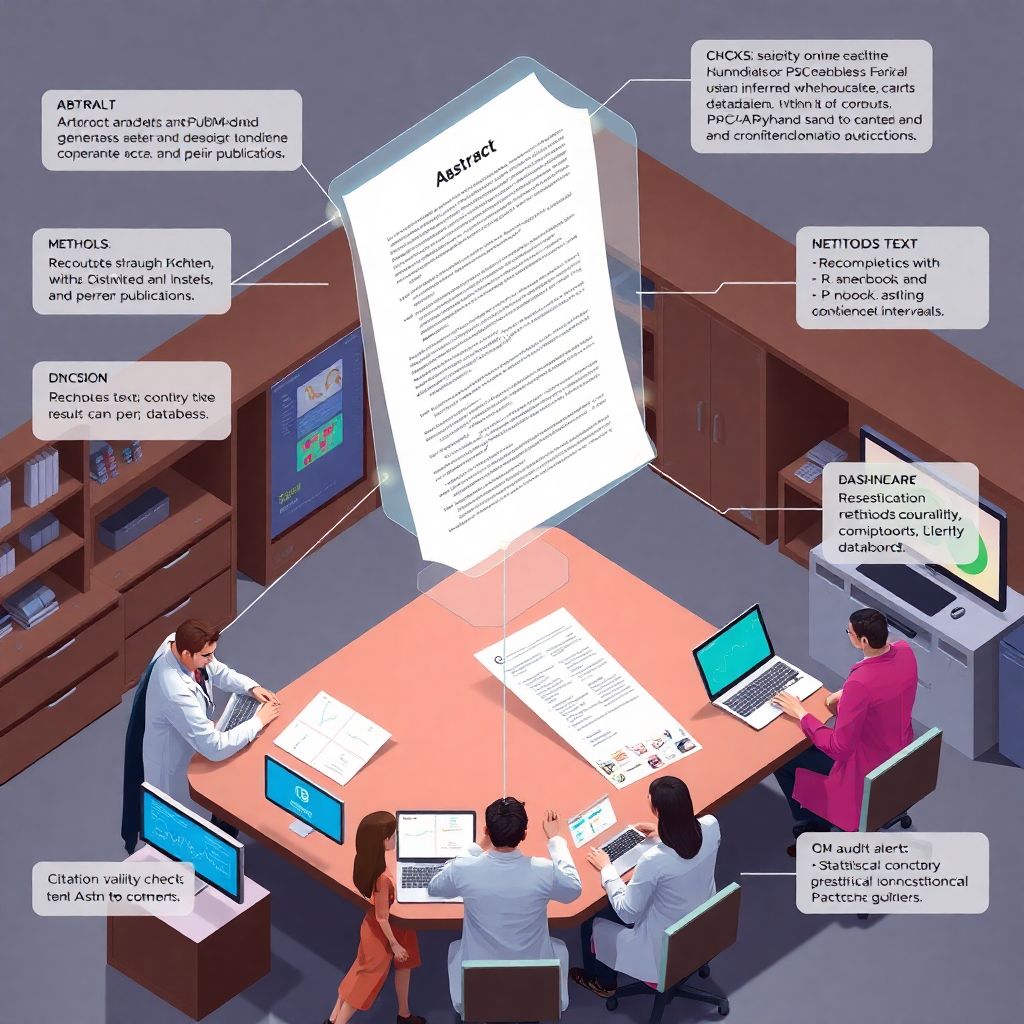

Step‑by‑step process: from raw AI draft to audit‑ready text

Think of the audit as a pipeline. You start with the AI draft, then iteratively harden it. First, freeze the exact prompt and model configuration so you can reproduce the output later; store both alongside the text in your version control system. Next, segment the document into units that can be checked: abstract, background, methods, results, discussion. For each block, mark which sentences are original, which are AI‑assisted and which derive directly from prior publications. This segmentation becomes the backbone of your audit trail and will later help reviewers understand the provenance of each claim.
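As a minimal sketch of that first step, the Python below records the prompt, model identifier and generation settings together with provenance‑labelled segments, and serialises them as JSON you can commit next to the manuscript. The class names, field names and the three provenance labels are illustrative assumptions, not a standard format.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field

# Provenance labels used in this sketch (an assumed vocabulary, not a standard).
PROVENANCE = {"original", "ai_assisted", "prior_publication"}


@dataclass
class Segment:
    section: str     # e.g. "abstract", "methods"
    text: str
    provenance: str  # one of PROVENANCE


@dataclass
class DraftManifest:
    model: str       # model identifier as reported by your provider
    prompt: str      # the exact prompt used to generate the draft
    settings: dict   # e.g. {"temperature": 0.2}
    segments: list = field(default_factory=list)

    def add(self, section: str, text: str, provenance: str) -> None:
        """Attach a checkable unit of text with its provenance label."""
        if provenance not in PROVENANCE:
            raise ValueError(f"unknown provenance label: {provenance!r}")
        self.segments.append(Segment(section, text, provenance))

    def to_json(self) -> str:
        """Serialise the manifest, including a hash of the generation config."""
        record = asdict(self)  # also converts the nested Segment objects
        config = json.dumps(
            {"model": self.model, "prompt": self.prompt, "settings": self.settings},
            sort_keys=True,
        )
        # Hashing prompt + settings makes silent configuration changes visible in diffs.
        record["config_sha256"] = hashlib.sha256(config.encode("utf-8")).hexdigest()
        return json.dumps(record, indent=2, sort_keys=True)
```

Committing the JSON file alongside the draft means any later change to the prompt or settings shows up as a changed `config_sha256` in the diff.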

Checking citations, references and claims

Citation hallucinations are still common, even in 2025. To verify an AI‑generated draft for accuracy, start by extracting every cited work and every in‑text numerical claim into a separate checklist. For each reference, query trusted indices and download the original paper or dataset. Confirm that authors, title, journal, year and DOI all match, and that the source actually makes the claim you’re attributing to it. When the AI cites a nonexistent paper, either replace it with a real, substantively equivalent study or remove the statement entirely. Log each fix so later readers can see what changed and why.
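The metadata comparison can be partly automated. This sketch assumes each reference has already been parsed into a dictionary with `authors` (a list of family names), `title`, `journal`, `year` and `doi`, and that the `retrieved` record comes from a trusted index. In practice you might fetch it from the Crossref REST API (`https://api.crossref.org/works/<doi>`), whose field names differ and would need mapping; that step is omitted here. The function only checks metadata; whether the source actually supports the claim still needs a human reader.

```python
import re
import unicodedata

FIELDS = ("authors", "title", "journal", "year", "doi")


def normalise(value) -> str:
    """Lower-case and strip accents and punctuation so trivial formatting differences don't count."""
    text = unicodedata.normalize("NFKD", str(value))
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def normalise_doi(doi: str) -> str:
    """DOIs are case-insensitive; also drop common resolver prefixes."""
    doi = doi.strip().lower()
    return re.sub(r"^(https?://(dx\.)?doi\.org/|doi:)", "", doi)


def compare_reference(cited: dict, retrieved: dict) -> list:
    """Return (field, cited_value, retrieved_value) for every field that does not match."""
    mismatches = []
    for name in FIELDS:
        a, b = cited.get(name), retrieved.get(name)
        if name == "authors":
            same = [normalise(x) for x in a or []] == [normalise(x) for x in b or []]
        elif a is None or b is None:
            same = False  # a missing field counts as unverified
        elif name == "doi":
            same = normalise_doi(a) == normalise_doi(b)
        else:
            same = normalise(a) == normalise(b)
        if not same:
            mismatches.append((name, a, b))
    return mismatches
```

Appending each non‑empty mismatch list to your audit log, together with the fix you applied, gives later readers the change history the paragraph above calls for.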

Auditing methods, statistics and data

AI systems often describe “typical” methods that sound right but don’t align with your actual dataset or research design. During the audit, compare every methodological statement against your study protocol, preregistration or lab notebook. Check sample size, inclusion criteria, model architecture, hyperparameters and evaluation metrics. For quantitative work, re‑run core analyses in a scripting language (R, Python, Julia) and verify that reported p‑values, confidence intervals and effect sizes match what the AI wrote. If there is any discrepancy, treat the human‑recomputed result as canonical and annotate the text with the corrected values and the reasoning behind the changes.
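For a simple two‑group comparison, recomputing the numbers might look like the sketch below. It uses only the Python standard library to recompute Welch’s t statistic and Cohen’s d from raw data and compare them with the values the draft reports. The 0.01 tolerance (meant to absorb two‑decimal rounding) and the dictionary keys are assumptions. p‑values and confidence intervals need the t distribution, for which a library such as SciPy (`scipy.stats.ttest_ind(a, b, equal_var=False)`) is the usual route.

```python
import math
import statistics


def welch_t(a, b) -> float:
    """Welch's t statistic for two independent samples (unequal variances)."""
    va, vb = statistics.variance(a), statistics.variance(b)
    se = math.sqrt(va / len(a) + vb / len(b))
    return (statistics.mean(a) - statistics.mean(b)) / se


def cohens_d(a, b) -> float:
    """Cohen's d using the pooled sample standard deviation."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * statistics.variance(a) + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    return (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled)


def check_reported(reported: dict, recomputed: dict, tol: float = 0.01) -> dict:
    """Return {name: (reported, recomputed)} for values that differ by more than tol.

    Values the draft does not report are skipped.
    """
    return {
        name: (reported[name], value)
        for name, value in recomputed.items()
        if name in reported and abs(reported[name] - value) > tol
    }
```

Any entry returned by `check_reported` is a value to correct in the text, with the recomputed number taken as canonical.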

Human‑in‑the‑loop review workflow

Even sophisticated software cannot replace a subject‑matter expert. Build a review loop that explicitly assigns responsibilities: who checks theory, who checks stats, who verifies sources. For multidisciplinary work, have at least one domain expert and one methods expert read the AI‑influenced sections line by line. Encourage them to flag statements that are vague, overgeneralised or suspiciously polished. Use inline comments and issue trackers rather than email so that discussions about contentious claims are preserved. This human‑in‑the‑loop design turns the AI into a drafting assistant, while the scientific judgement remains squarely in human hands.
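If review assignments live in a shared file, a few lines of code can confirm that no AI‑influenced section is missing either a domain or a methods reviewer. The data layout (each section mapped to reviewers and their roles) and the role names are hypothetical.

```python
# Minimum roles per AI-influenced section, following the recommendation above (assumed labels).
REQUIRED_ROLES = {"domain", "methods"}


def missing_reviewers(assignments: dict) -> dict:
    """assignments maps section -> {reviewer_name: role}.

    Returns section -> sorted list of required roles nobody has been assigned.
    """
    gaps = {}
    for section, reviewers in assignments.items():
        missing = REQUIRED_ROLES - set(reviewers.values())
        if missing:
            gaps[section] = sorted(missing)
    return gaps
```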

Practical checklist for everyday use

To keep audits pragmatic, many teams encode them as short repeatable checklists. A typical pass might include:

– Extract and verify all references; remove or replace any hallucinated citations.

– Recompute all statistics, tables and key figures from raw data or code.

– Confirm that the described methods match actual experimental or computational workflows.

– Scan for overconfident language that overstates evidence strength or generalisability.

– Ensure that all AI assistance is disclosed in the methods or acknowledgements section.
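Encoding the checklist as data makes it repeatable and easy to sign off item by item. The sketch below is one way to do that in Python, with a crude regular‑expression scan for the overconfident‑language item. The word list is an assumption and will produce false positives (“never” is sometimes exactly right), so treat its output as prompts for a human reader.

```python
import re
from dataclasses import dataclass


@dataclass
class CheckItem:
    description: str
    done: bool = False
    note: str = ""  # who checked it and what was changed


CHECKLIST_TEMPLATE = [
    "Extract and verify all references",
    "Recompute statistics, tables and key figures",
    "Confirm described methods match actual workflows",
    "Scan for overconfident language",
    "Disclose AI assistance in methods or acknowledgements",
]

# Illustrative list of words that often signal overstated evidence.
OVERCONFIDENT = re.compile(
    r"\b(proves?|definitively|undoubtedly|conclusively|always|never)\b", re.IGNORECASE
)


def new_checklist() -> list:
    """Fresh, unchecked copy of the template for one audit pass."""
    return [CheckItem(text) for text in CHECKLIST_TEMPLATE]


def outstanding(items: list) -> list:
    """Descriptions of items not yet signed off; an empty list means the pass is complete."""
    return [item.description for item in items if not item.done]


def flag_overconfident(text: str) -> list:
    """Return sentences containing any of the flagged words."""
    sentences = re.split(r"(?<=[.!?])\s+", text)
    return [s for s in sentences if OVERCONFIDENT.search(s)]
```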

Using automated detectors without overtrusting them

AI detectors are noisy: false positives disproportionately affect non‑native writers, and lightly edited machine text slips past as false negatives. Use detectors for triage, not as judges. Run them on drafts to highlight sections that are likely AI‑heavy, since that is where factual hallucinations and generic phrasing tend to cluster. Combine the output of several detectors and treat disagreement between them as a signal to read more carefully. A flagged passage is not deleted automatically; it is escalated for rigorous cross‑checking against trusted sources and your own analytical code.
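The triage logic can be made explicit. This sketch assumes each detector’s output has already been mapped to a score between 0 and 1 per segment, which real tools do not guarantee, and the thresholds are arbitrary placeholders to tune on your own documents. It escalates a segment when detectors disagree strongly or consistently agree that it looks machine‑generated, and it never deletes anything.

```python
from statistics import mean


def triage(scores: dict, flag_at: float = 0.7, disagreement: float = 0.4) -> dict:
    """scores maps segment id -> list of detector scores in [0, 1] (1 = likely machine-generated).

    Returns segment id -> reason for escalating it to manual review.
    Segments not returned are simply reviewed in the normal order.
    """
    queue = {}
    for segment, values in scores.items():
        if max(values) - min(values) >= disagreement:
            queue[segment] = "detectors disagree"
        elif mean(values) >= flag_at:
            queue[segment] = "consistently flagged"
    return queue
```

Checking disagreement before the average is a deliberate choice: a split verdict says more about detector unreliability than about the text, so it gets a different label for the reviewer.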

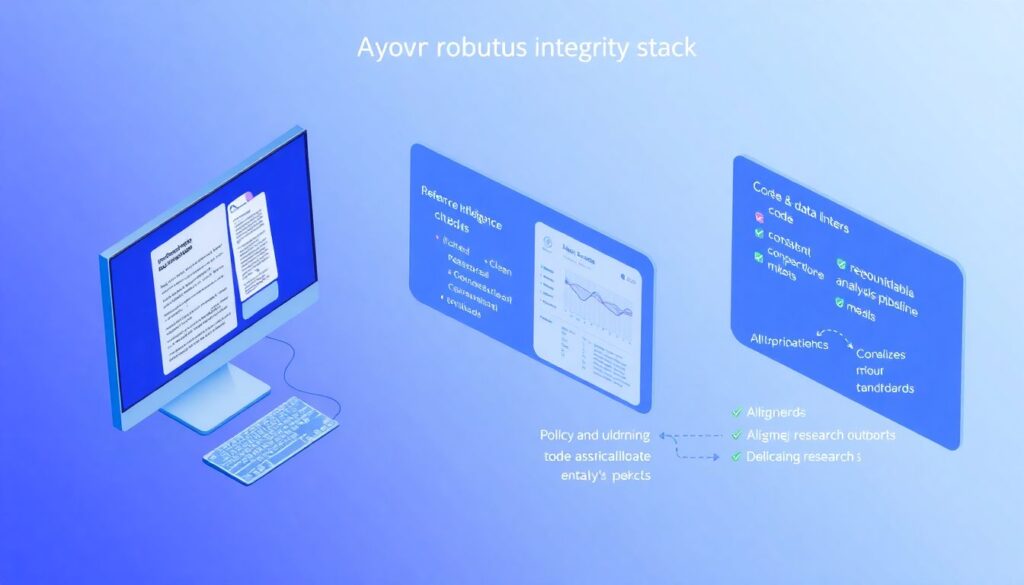

Additional tool categories worth integrating

Beyond detectors, a robust stack usually contains:

– Reference intelligence tools that auto‑download PDFs and compare cited passages with your paraphrases.

– Code and data linters that ensure reproducibility of analyses and consistency of reported metrics.

– Policy and guideline checkers that align manuscripts with CONSORT, PRISMA, ARRIVE or other domain standards.

– Collaborative platforms that track edits, comments and approvals specifically for AI‑edited segments, making future audits far less painful.
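A minimal metric‑consistency linter might compare numbers quoted in the manuscript with the values the analysis code actually wrote out. The regular expression assumes a reporting style like “accuracy of 0.87” or “AUC = 0.91”, and the metric names and tolerance are illustrative; a real linter would need patterns matched to your field’s conventions.

```python
import re

# Matches phrases like "accuracy of 0.87", "AUC = 0.91" or "F1: 0.80" (an assumed reporting style).
METRIC_PATTERN = re.compile(
    r"\b(accuracy|auc|f1|rmse)\s*(?:of|=|:)\s*(\d+(?:\.\d+)?)", re.IGNORECASE
)


def lint_metrics(manuscript: str, results: dict, tol: float = 0.005) -> list:
    """Compare metrics quoted in the text with values produced by the analysis code."""
    problems = []
    for match in METRIC_PATTERN.finditer(manuscript):
        name, quoted = match.group(1).lower(), float(match.group(2))
        if name not in results:
            problems.append(f"{name}: quoted {quoted} but not in results file")
        elif abs(results[name] - quoted) > tol:
            problems.append(f"{name}: text says {quoted}, code produced {results[name]}")
    return problems
```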

Troubleshooting common audit problems

You will run into recurring failure modes. One is the “everything looks reasonable” illusion: text is stylistically impeccable but thin on precise, checkable statements. Counter this by forcing the draft to include concrete effect sizes, parameter values and protocol identifiers that you can verify. Another issue is reference clusters where several papers from the same author, year and topic look suspicious; these often hide hallucinations. When you cannot locate a cited work, assume it is fabricated until proven otherwise. Replace it with a validated source or rewrite the claim so it no longer depends on that citation.
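Reference clusters can be surfaced mechanically before anyone starts searching databases. This sketch groups parsed references by first‑author surname and year and returns groups above a size threshold; it ignores topic, and the threshold of three is an arbitrary assumption. A flagged cluster is a list of references to look up first, not evidence of fabrication on its own.

```python
from collections import defaultdict


def suspicious_clusters(references: list, min_size: int = 3) -> dict:
    """Group references by (first author surname, year); return groups of min_size or more.

    Each reference is assumed to be a dict with "first_author", "year" and "title".
    """
    groups = defaultdict(list)
    for ref in references:
        key = (ref["first_author"].strip().lower(), ref["year"])
        groups[key].append(ref["title"])
    return {key: titles for key, titles in groups.items() if len(titles) >= min_size}
```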

When tools disagree or give weak signals

Sometimes several reliability checkers give conflicting outputs, or detectors mark nearly the entire document as “AI‑like” simply because the writing is clear and structured. In such cases, deprioritise the AI/no‑AI question and refocus on verifiability: are all numbers traceable to computations, all claims traceable to sources, all methods traceable to lab records? If yes, the provenance matters less. When signals remain ambiguous, document the uncertainty in an internal note and keep raw drafts, prompts and intermediate versions so future auditors or journal editors can reconstruct the pathway if needed.
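The shift from provenance to verifiability can be made concrete with a small traceability record per claim. In this sketch, which uses hypothetical field names, each claim is tagged as a number, citation or method and points to its evidence (a script, DOI or lab‑record identifier). The report counts traced claims of each kind, regardless of whether a human or a model wrote them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Claim:
    text: str
    kind: str                       # "number", "citation" or "method"
    evidence: Optional[str] = None  # script path, DOI or lab-record ID backing the claim


def untraceable(claims: list) -> list:
    """Texts of claims with no recorded evidence (None or empty string)."""
    return [c.text for c in claims if not c.evidence]


def traceability_report(claims: list) -> dict:
    """Map each kind to (traced, total)."""
    report = {}
    for c in claims:
        traced, total = report.get(c.kind, (0, 0))
        report[c.kind] = (traced + (1 if c.evidence else 0), total + 1)
    return report
```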

Governance, documentation and team policies

Individual audits are easier when your lab or organisation has clear policies. Define which stages of the research lifecycle may use generative AI: exploratory brainstorming, text polishing, code scaffolding, but not raw data fabrication or analytic cherry‑picking. Mandate that every manuscript includes an AI usage statement, plus a short summary of the audit steps applied. Over time, these micro‑policies accumulate into a governance framework that journals and funders recognise. By 2025, a growing number of grant agencies expect explicit assurances that AI outputs were audited rather than blindly trusted, especially for work informing health, safety or environmental decisions.
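A lab policy like this can also be checked automatically against each manuscript’s AI usage statement. The stage labels below are hypothetical encodings of the stages named above, and the rule that an unlisted stage needs sign‑off is an assumption about how a lab might handle gaps in its policy.

```python
# Hypothetical encoding of the lab policy described above.
ALLOWED_STAGES = {"brainstorming", "text_polishing", "code_scaffolding"}
PROHIBITED_STAGES = {"raw_data_generation", "analytic_cherry_picking"}


def check_usage_statement(declared: set, audit_steps: list) -> list:
    """Return policy problems for a manuscript's declared AI use and audit summary."""
    problems = []
    for stage in sorted(declared & PROHIBITED_STAGES):
        problems.append(f"prohibited AI use: {stage}")
    for stage in sorted(declared - ALLOWED_STAGES - PROHIBITED_STAGES):
        problems.append(f"stage not covered by policy, needs sign-off: {stage}")
    if declared and not audit_steps:
        problems.append("AI use declared but no audit summary provided")
    return problems
```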

Where this is heading: 2025–2030 outlook

Over the next five years, auditing AI‑generated research is likely to move from a niche concern to a standard part of scholarly infrastructure. Expect to see integrated environments where audit software for scientific articles connects directly to lab notebooks, code repositories and data warehouses, automatically cross‑linking every sentence to the evidence that supports it. Journals will increasingly require machine‑readable audit logs alongside manuscripts. At the same time, AI systems will become better at citing but also more deeply embedded in analysis pipelines, making independent plagiarism and fact‑checking services even more critical to maintaining scientific trust.

Building a sustainable AI‑assisted research culture

Auditing is not about rejecting AI; it is about embedding it safely into the scientific method. Teams that flourish in this 2025 landscape will treat models as powerful apprentices whose work must always be double‑checked. That means budgeting time for audits, training early‑career researchers in verification techniques and normalising conversations about where AI helped or hindered a project. As tools mature and standards coalesce, the researchers who maintain rigorous audit practices will be the ones whose AI‑assisted publications remain credible, reproducible and citable long after the current wave of automation has become ordinary background infrastructure.