Why crypto firms need a different kind of analytics platform

Most crypto companies start with spreadsheets, block explorers and a couple of APIs, then suddenly discover they’re expected to operate like banks: instant investigations, audit‑ready reports, zero tolerance for mistakes. At this point a modular crypto analytics platform for enterprises stops being “nice to have” and turns into survival gear. The trick is not to copy legacy banking stacks, but to treat on‑chain data as a living system: noisy, probabilistic, constantly forking. A rigid monolith can’t keep up, whereas a modular setup lets you upgrade risk, compliance or pricing logic independently, without freezing the entire product roadmap every time regulation or market structure changes.

Historical background: from block explorer to modularity

Early crypto analytics tools were basically fancy explorers: you typed an address, got a list of transactions, did the rest in your head. Then came forensic dashboards, KYC/AML vendors and “crypto compliance and analytics software for firms” that mirrored traditional regtech: big UI, fixed rules, slow release cycles. The problem surfaced quickly: a DeFi exploit or a new privacy protocol appears overnight, while vendor roadmaps move in quarters. That gap pushed larger players to build custom modular blockchain analytics architectures in‑house, splitting data ingestion, classification, scoring and reporting into loosely coupled services, each owned by a small, fast team, instead of signing one huge contract that locks them into a single vendor.

Core principles of a modular platform

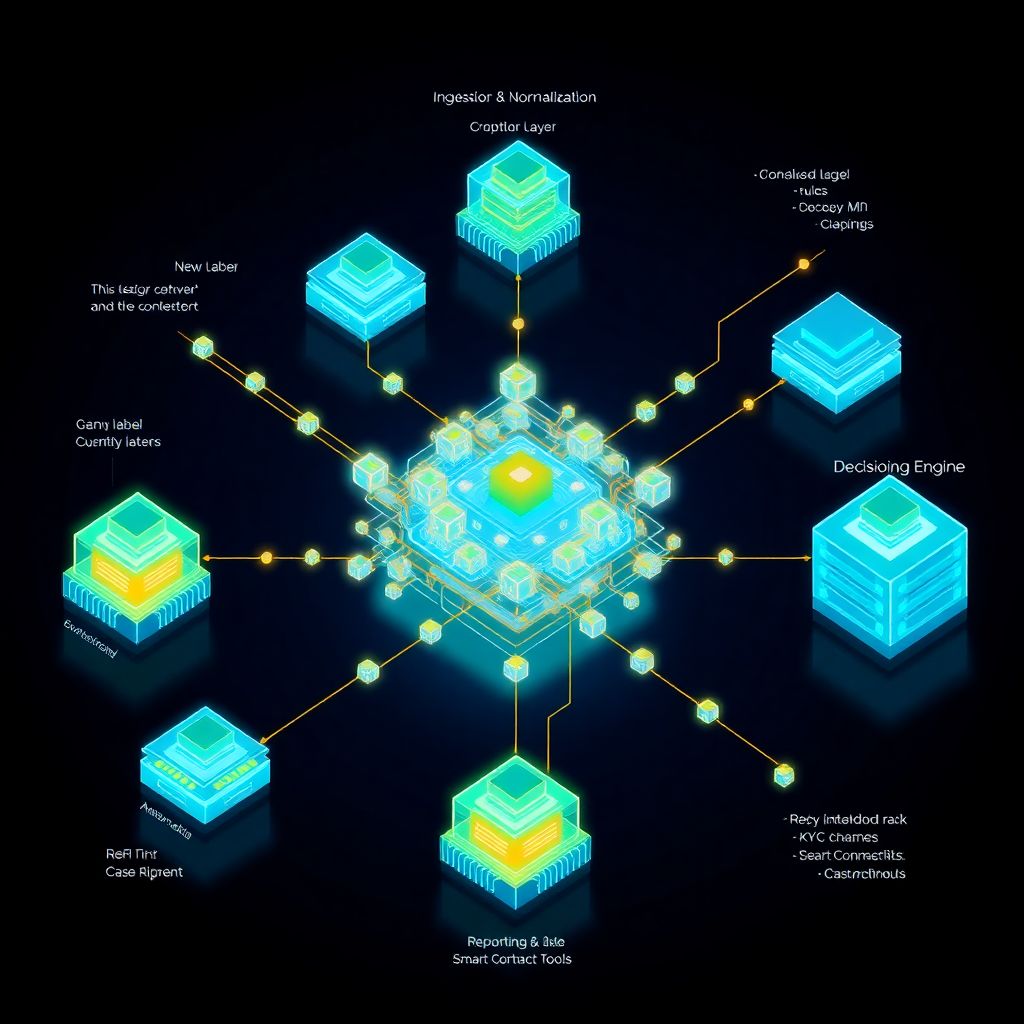

At the core, a crypto risk management and transaction monitoring platform should act like a set of Lego blocks, not like a concrete slab. Data ingestion, entity resolution, risk scoring, reporting and investigator tools must be separate, replaceable layers. Think of every module as an “experiment slot”: you can swap clustering algorithms, change sanction lists, or test a new anomaly‑detection model without touching the rest. The key principle: standard contracts between modules (well‑designed schemas, stable APIs, clear SLAs), so teams iterate inside their box but never break others. This keeps compliance officers, quants and engineers moving at different speeds without constant coordination hell.
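
To make the idea of a “standard contract between modules” concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `Transfer` schema, the `RiskScorer` contract and both scorer classes are hypothetical names, not part of any particular product. It only shows that the caller depends on the contract, so a scoring module can be swapped without touching anything else.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Transfer:
    """Normalized transfer record shared by all modules (hypothetical schema)."""
    tx_hash: str
    sender: str
    receiver: str
    amount_usd: float


class RiskScorer(Protocol):
    """Contract every risk module must satisfy: return a score in [0, 1]."""

    def score(self, transfer: Transfer) -> float: ...


class DenyListScorer:
    """Scores 1.0 when either party is on an internal deny list, else 0.0."""

    def __init__(self, deny_list: set[str]) -> None:
        self.deny_list = deny_list

    def score(self, transfer: Transfer) -> float:
        hit = transfer.sender in self.deny_list or transfer.receiver in self.deny_list
        return 1.0 if hit else 0.0


class AmountScorer:
    """Toy alternative: risk grows linearly with transfer size up to a cap."""

    def __init__(self, cap_usd: float) -> None:
        self.cap_usd = cap_usd

    def score(self, transfer: Transfer) -> float:
        return min(transfer.amount_usd / self.cap_usd, 1.0)


def evaluate(scorer: RiskScorer, transfers: list[Transfer]) -> dict[str, float]:
    """The caller sees only the contract, never a concrete scorer class."""
    return {t.tx_hash: scorer.score(t) for t in transfers}


transfers = [
    Transfer("0xaaa", "alice", "bob", 2_500.0),
    Transfer("0xbbb", "mallory", "bob", 50_000.0),
]
deny_scores = evaluate(DenyListScorer({"mallory"}), transfers)
amount_scores = evaluate(AmountScorer(cap_usd=10_000.0), transfers)
```

Both scorers plug into `evaluate` unchanged, which is the “experiment slot” property in miniature. In a real deployment the contract would more likely be a versioned schema plus a network API than an in-process interface.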

Critical modules the platform won’t take off without

A practical way to design the best blockchain analytics tools for crypto businesses is to start from questions, not from databases. “Who is behind this flow?”, “Is this pattern normal for this customer?”, “What do I tell the regulator?” Map each question to a module. Typically you end up with: ingestion from nodes and third‑party APIs; address and entity labeling; risk engines; case management; reporting. Then you add “innovation modules”: graph‑ML experimentation, alternative heuristics, privacy‑preserving analytics. The beauty of modularity is that vendors become pluggable components, not foundations: you can mix open‑source tooling, commercial APIs and your own models behind a single unified interface.

- Ingestion & normalization: full nodes, indexers, mempool listeners, plus CeFi/DeFi integrations.

- Knowledge layer: clustering, labels, off‑chain intel, watchlists, internal allow/deny lists.

- Decisioning: rules, ML, scenario engines, alerting, case routing and feedback loops.
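
The three layers above can be sketched as a chain of small stages over one shared record shape. This is a toy under stated assumptions: the field names, the `LABELS` table and the 10,000 USD threshold are all hypothetical, and a real system would run each stage as its own service rather than as a local function.

```python
from typing import Any, Callable

Record = dict[str, Any]

# Hypothetical label store standing in for the knowledge layer.
LABELS = {"0xmixer": "mixer"}


def normalize(raw: Record) -> Record:
    """Ingestion & normalization: map source-specific fields to one shape."""
    return {
        "tx": raw.get("hash") or raw.get("txid"),
        "to": str(raw.get("to") or raw.get("recipient")).lower(),
        "usd": float(raw.get("usd", 0.0)),
    }


def enrich(rec: Record) -> Record:
    """Knowledge layer: attach a label to the receiving address."""
    return {**rec, "label": LABELS.get(rec["to"], "unknown")}


def decide(rec: Record) -> Record:
    """Decisioning: a simple rule that alerts on mixers or large transfers."""
    alert = rec["label"] == "mixer" or rec["usd"] >= 10_000
    return {**rec, "alert": alert}


# The order is fixed, but any single stage can be replaced independently.
PIPELINE: list[Callable[[Record], Record]] = [normalize, enrich, decide]


def run(raw: Record) -> Record:
    rec = raw
    for stage in PIPELINE:
        rec = stage(rec)
    return rec


# Two sources with different field names end up in the same shape.
from_node = run({"hash": "0x1", "to": "0xMIXER", "usd": 50})
from_vendor = run({"txid": "0x2", "recipient": "0xabc", "usd": 20_000})
small = run({"txid": "0x3", "recipient": "0xabc", "usd": 5})
```

Because each stage only reads and returns the shared record, replacing `enrich` with a vendor API call or `decide` with an ML model leaves the other two stages untouched.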

Unconventional architectural decisions

One unconventional idea is to treat your analytics stack like a “simulator first” system. Before any rule goes live, you replay historical chains as if you were a time‑travelling regulator, measuring false positives, missed scams and operational cost. For that you maintain a shadow copy of your compliance and analytics stack: same modules, but fed only with historical data and experimental logic. Another non‑obvious move: design a “bring‑your‑own‑model” interface so external research teams, universities or even selected clients can deploy detection logic as sandboxed functions. They get controlled access to de‑identified data; you get a continuous stream of fresh ideas and niche detectors.
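
A “simulator first” check can be as small as replaying labeled history through a candidate rule and counting outcomes. The sketch below assumes that ground-truth labels (`was_illicit`) exist from past investigations, and the `HistoricalTx` fields, both rules and the four sample rows are invented purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class HistoricalTx:
    tx_hash: str
    amount_usd: float
    hops_from_mixer: int
    was_illicit: bool  # assumed ground truth from later investigations


Rule = Callable[[HistoricalTx], bool]


def backtest(rule: Rule, history: list[HistoricalTx]) -> dict[str, float]:
    """Replay history through a rule and count hits, false alarms and misses."""
    tp = fp = fn = 0
    for tx in history:
        flagged = rule(tx)
        if flagged and tx.was_illicit:
            tp += 1
        elif flagged:
            fp += 1
        elif tx.was_illicit:
            fn += 1
    alerts = tp + fp
    return {
        "alerts": alerts,  # rough proxy for analyst workload
        "false_positives": fp,
        "missed": fn,
        "precision": tp / alerts if alerts else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


def large_amount(tx: HistoricalTx) -> bool:
    """Rule currently in production (hypothetical)."""
    return tx.amount_usd >= 10_000


def near_mixer(tx: HistoricalTx) -> bool:
    """Candidate rule under evaluation (hypothetical)."""
    return tx.hops_from_mixer <= 2


history = [
    HistoricalTx("0x01", 12_000, 1, True),
    HistoricalTx("0x02", 15_000, 6, False),
    HistoricalTx("0x03", 300, 1, True),
    HistoricalTx("0x04", 50, 8, False),
]

current = backtest(large_amount, history)
candidate = backtest(near_mixer, history)
```

On this toy history the candidate rule raises the same number of alerts as the current one but with no false positives and no misses. A production replay would run over full chain history and also need to account for labels that only became known after the fact.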

Event‑driven, not dashboard‑driven

Most teams obsess over dashboards; a modular platform should obsess over events. Every meaningful state change—new label, risk score update, off‑chain KYC change, new smart‑contract risk—emits an event into a central bus. Downstream modules subscribe: monitoring raises alerts, reporting updates exposures, pricing recalculates discounts. This lets you “program” business behavior via subscriptions rather than endless if‑else spaghetti. It also scales nicely across chains and business lines: when you integrate a new L2 or a novel protocol, you just add new event producers; the consumers don’t really care where the data came from, as long as the contract of the event stays stable and well documented.

- Use an event bus as the backbone; keep storage and computation at the edges.

- Prefer idempotent processors so you can safely reprocess chains as labels improve.

- Expose internal events (carefully sanitized) to clients as a value‑add data product.
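
The event backbone and the idempotency advice above can be illustrated with an in-memory sketch. The `EventBus`, the topic name `risk_score.updated`, the 0.8 threshold and both consumers are hypothetical. A real deployment would use a durable message broker and persist the set of processed event IDs instead of keeping it in memory.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Event:
    event_id: str  # stable ID, so a replayed event can be recognized
    topic: str
    payload: dict


Handler = Callable[[Event], None]


class EventBus:
    """Minimal in-memory bus: producers publish, consumers subscribe by topic."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, event: Event) -> None:
        for handler in self._subscribers[event.topic]:
            handler(event)


class AlertRaiser:
    """Idempotent consumer: an event ID is processed at most once."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self._seen: set[str] = set()
        self.alerts: list[str] = []

    def handle(self, event: Event) -> None:
        if event.event_id in self._seen:
            return  # replayed event, no duplicate alert
        self._seen.add(event.event_id)
        if event.payload["score"] >= self.threshold:
            self.alerts.append(event.payload["address"])


class ExposureTracker:
    """Second consumer of the same topic; set updates are naturally idempotent."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.high_risk: set[str] = set()

    def handle(self, event: Event) -> None:
        if event.payload["score"] >= self.threshold:
            self.high_risk.add(event.payload["address"])
        else:
            self.high_risk.discard(event.payload["address"])


bus = EventBus()
alerting = AlertRaiser(threshold=0.8)
exposure = ExposureTracker(threshold=0.8)
bus.subscribe("risk_score.updated", alerting.handle)
bus.subscribe("risk_score.updated", exposure.handle)

e1 = Event("evt-1", "risk_score.updated", {"address": "0xabc", "score": 0.93})
e2 = Event("evt-2", "risk_score.updated", {"address": "0xdef", "score": 0.10})
for event in (e1, e2, e1):  # the third publish simulates a reprocessing run
    bus.publish(event)
```

Replaying `e1` leaves `alerting.alerts` with a single entry, which is what makes it safe to reprocess a chain after labels improve. Adding a new chain means adding a producer for the same topic; neither consumer changes.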

Implementation examples: from startup to large enterprise

Imagine a mid‑size exchange that wants custom, modular blockchain analytics capabilities without turning into a data company. They start with managed node providers plus an open‑source indexer, wrap them into a unified ingestion service, then plug in two different risk engines: one vendor‑based for sanctions and known bad actors, one in‑house for behavior‑based scoring. Case management is another module, connected by webhooks and APIs, not hard‑coded SDKs. Over time they swap vendors, experiment with new graph algorithms for mixer detection, and add support for NFT and DeFi positions, all without rewriting or even pausing the rest of their infrastructure.

A large player across many jurisdictions

Now take a multinational custodian. They need one modular crypto analytics platform for enterprises that respects wildly different regulations. Instead of hard‑coding rules per country, they maintain a policy engine module: regulations, internal risk appetite and product constraints become configuration, versioned like code. Each jurisdiction loads its policy bundle; the analytics output is the same, but decisions differ: alert thresholds, escalation steps, reporting templates. When a regulator updates guidance, you update configuration, not the platform. This “policy as data” mindset keeps engineering focused on accuracy and performance, while legal and compliance teams safely own the logic that regulators actually care about.
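
A rough sketch of “policy as data”: the analytics output (a risk score) is identical everywhere, and only the per-jurisdiction bundle decides what happens next. The jurisdiction keys, thresholds, version strings and template names below are placeholders, not real regulatory values. In practice the bundles would live in version-controlled configuration files rather than in code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    version: str  # versioned like code, so decisions are auditable
    alert_threshold: float
    escalate_threshold: float
    report_template: str


# Hypothetical policy bundles, owned by legal and compliance teams.
POLICIES = {
    "jurisdiction_a": Policy("2024.1", 0.60, 0.85, "template_a"),
    "jurisdiction_b": Policy("2024.3", 0.70, 0.90, "template_b"),
}


def decide(score: float, jurisdiction: str) -> dict[str, str]:
    """Turn one shared risk score into a jurisdiction-specific decision."""
    policy = POLICIES[jurisdiction]
    if score >= policy.escalate_threshold:
        action = "escalate"
    elif score >= policy.alert_threshold:
        action = "alert"
    else:
        action = "pass"
    return {
        "action": action,
        "policy_version": policy.version,
        "report_template": policy.report_template,
    }


# Same score, different outcomes depending on the loaded bundle.
in_a = decide(0.65, "jurisdiction_a")
in_b = decide(0.65, "jurisdiction_b")
```

Recording `policy_version` with every decision makes it possible to explain later which rules were in force at the time. Updating guidance means shipping a new bundle, with no change to `decide` or to the scoring modules.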

Common misconceptions when building the platform

The first misconception is believing that buying a ready‑made product will solve everything. Off‑the‑shelf crypto risk management and transaction monitoring platforms cover the basic cases but quickly become a bottleneck: you start changing business processes to fit the product rather than the other way around. The second is underestimating the cost of bad data: if you economize on normalization and redundant storage, any sophisticated model turns into an expensive illusion. The third is “ML will save everyone”: without clear feedback from analysts and without an experimentation loop, even the best models degrade over time, and the team drowns in alerts and stops trusting the system at critical moments.

The myth that modularity is slow and expensive

Many fear that a modular approach will slow down the product launch. In practice the opposite is true: a minimum viable platform is just a few well‑designed modules and a clear event loop. Start with a limited set of assets, basic address categorization and simple rules, but make sure from day one that every component can be “undocked”. This architecture pays for itself at the first major regulatory change or large‑scale incident: you add a temporary detector, a separate incident store and separate reporting, then remove them a month later without breaking the core. Flexibility here is not a pretty theory but a way to save months and millions.