Why reproducible crypto backtests are harder than they look

Most people think reproducibility means “saving the script and running it again later”. In crypto, that’s barely the first layer. Prices get revised, exchanges disappear, funding rates are recalculated, and candles are resampled differently between providers. Two runs of the “same” strategy can diverge materially just because an exchange revised its history or changed how it rounds trades. So when you talk about best practices for reproducible backtests in crypto, you’re really talking about building a mini-scientific workflow, where data, parameters, environment and code versions are pinned down and easy to reconstruct months later without guesswork.

At a high level, the game plan is simple: freeze everything that matters, label it clearly, and make it dirt-cheap to rerun. Let’s walk through that step by step, from data and randomness to infra and documentation, mixing standard advice with a few non-obvious tricks that save you a lot of pain once strategies get complex or capital gets real.

—

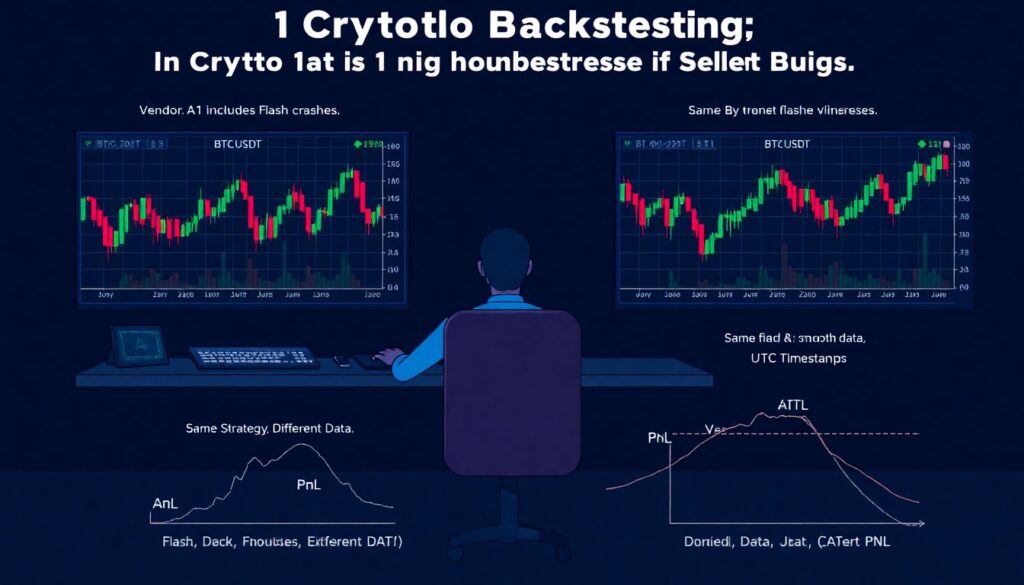

Step 1. Lock down your data like it’s code

For crypto backtesting, data is the main source of silent bugs. Two runs of the same strategy using “BTC-USDT 1m candles from 2021” can give different PnL just because one vendor includes wicks from flash crashes and another smooths them out, or one shifts timestamps to UTC while another uses exchange-local time. To make your results reproducible, you want your data to be versioned, checksummed and described. That means you can say: “Backtest X used dataset Y with hash Z,” and anyone can re-download the exact bytes you used and rebuild the same time series, rather than trying to guess from vague descriptions or ambiguous filenames that don’t preserve critical details.

In practice, treat historical data like source code. Put raw CSV/Parquet files in a dedicated storage bucket or repository, generate hashes (e.g., SHA-256) for each file, and keep those hashes committed alongside your strategy code. When you re-run a backtest, verify hashes first; if something changed, abort and complain loudly. It’s also worth snapshotting data at the vendor level: if your crypto backtesting software pulls candles via API, create a batch job to freeze and store a daily snapshot so you’re never forced to rely on today’s notion of “what happened last year” as defined by a provider that quietly fixed its database.
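The "verify hashes first, abort if anything changed" step can be sketched in a few lines of standard-library Python. This is a minimal sketch, not a definitive implementation: the function names and the idea of a single JSON file mapping relative paths to SHA-256 digests are assumptions made for illustration.

```python
import hashlib
import json
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large CSV/Parquet files are never fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_hash_manifest(data_dir: Path, manifest_path: Path) -> dict[str, str]:
    """Record one hash per file under data_dir.

    Assumption: manifest_path lives outside data_dir and is committed
    alongside the strategy code.
    """
    hashes = {
        p.relative_to(data_dir).as_posix(): sha256_of_file(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }
    manifest_path.write_text(json.dumps(hashes, indent=2, sort_keys=True))
    return hashes


def verify_data(data_dir: Path, manifest_path: Path) -> None:
    """Raise if a recorded file is missing or its bytes no longer match."""
    expected = json.loads(manifest_path.read_text())
    problems = []
    for rel_path, want in expected.items():
        file_path = data_dir / rel_path
        if not file_path.is_file():
            problems.append(f"missing: {rel_path}")
        elif sha256_of_file(file_path) != want:
            problems.append(f"hash mismatch: {rel_path}")
    if problems:
        raise RuntimeError("data verification failed: " + "; ".join(problems))
```

Calling `verify_data(...)` as the first line of a backtest entry point means a silently changed file stops the run instead of changing the PnL.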

—

Step 2. Make randomness behave itself

Any Monte Carlo, bootstrapping, order-matching randomness, or even random train/test splits can wreck reproducibility if you don’t control it. You might think a single global random seed is enough, but as soon as you refactor code or change the number of assets, your random sequence shifts and results no longer match. That’s particularly brutal with automated crypto trading backtest tools that parallelize runs automatically and reshuffle execution order behind your back to squeeze out performance.

A better pattern: seed deterministically per object, not globally. For example, seed by (strategy_id, symbol, run_id) and use separate RNG streams for simulation, data augmentation and hyperparameter search. Log those seeds into your run metadata so you can rehydrate the exact same pseudo-random path. If you’re using a Python library for crypto backtesting, wrap its RNG entry points in a small utility that always takes an explicit seed argument instead of reading some hidden global state, so refactors don’t silently alter your distribution of fills, slippage or noise.
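One way to seed by (strategy_id, symbol, run_id) is to hash the identifiers into an integer and give each stream its own generator. The sketch below uses only the standard library; `derive_seed`, `make_rng`, the stream names and the example identifiers are hypothetical, chosen for illustration.

```python
import hashlib
import random


def derive_seed(strategy_id: str, symbol: str, run_id: str, stream: str) -> int:
    """Map identifiers to a stable 64-bit seed.

    SHA-256 is used instead of the built-in hash() because Python salts
    string hashes per process, which would change the seed on every run.
    Assumption: identifiers do not contain the "|" separator.
    """
    key = "|".join((strategy_id, symbol, run_id, stream)).encode("utf-8")
    return int.from_bytes(hashlib.sha256(key).digest()[:8], "big")


def make_rng(strategy_id: str, symbol: str, run_id: str, stream: str) -> random.Random:
    """Return an independent generator; the global random state is never touched."""
    return random.Random(derive_seed(strategy_id, symbol, run_id, stream))


# Separate streams for separate purposes (all names here are illustrative).
slippage_rng = make_rng("breakout_v2", "BTC-USDT", "run-001", "slippage")
search_rng = make_rng("breakout_v2", "BTC-USDT", "run-001", "hyperparam_search")
```

Because each seed depends only on its own identifiers, adding a new symbol or reordering parallel workers leaves every existing stream unchanged. The value returned by `derive_seed` is what you would log in the run metadata.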

—

Step 3. Define your backtest as data, not as a story

Humans describe backtests with stories: “I ran the breakout strategy on BTC and ETH from 2020–2023 with 0.1% fees and 5x leverage.” Machines need something more precise. For reproducibility, define every backtest as a single configuration object: which assets, which date range, which candle resolution, which fee model, which latency model, which risk limits, which environment variables, plus the commit hash of the strategy code itself. Think of this as a “backtest manifest” that specifies the experiment unambiguously.

Use a structured format like YAML or JSON and check those manifests into your repo. When you run a backtest, you pass exactly one manifest file to your engine. The engine forbids runtime overrides unless they’re explicitly logged. That’s how the best crypto backtesting platforms preserve consistency: a dashboard run is effectively “just” a nicer UI around a machine-readable specification. This eliminates the classic “I changed something in my local environment but forgot which flag it was” issue that ruins reproducibility without obvious errors or stack traces.
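As a rough illustration, a manifest can be loaded into an immutable object that rejects unknown keys, with a short ID derived from its contents. The field names, the example values (including the 50 ms latency and the file paths) and the helper names below are assumptions for this sketch, not a fixed schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)  # frozen: no runtime overrides after loading
class BacktestManifest:
    symbols: tuple[str, ...]
    start: str
    end: str
    resolution: str
    fee_rate: float
    leverage: float
    latency_ms: int
    code_commit: str
    data_hashes_file: str


def load_manifest(text: str) -> BacktestManifest:
    """Parse a JSON manifest; missing or unknown keys raise TypeError."""
    raw = json.loads(text)
    raw["symbols"] = tuple(raw["symbols"])
    return BacktestManifest(**raw)


def manifest_id(manifest: BacktestManifest) -> str:
    """Short content hash, independent of key order in the source file."""
    canonical = json.dumps(asdict(manifest), sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Hypothetical manifest matching the "story" version of the same backtest.
EXAMPLE = """
{
  "symbols": ["BTC-USDT", "ETH-USDT"],
  "start": "2020-01-01",
  "end": "2023-12-31",
  "resolution": "1m",
  "fee_rate": 0.001,
  "leverage": 5.0,
  "latency_ms": 50,
  "code_commit": "<git commit hash goes here>",
  "data_hashes_file": "data/hashes.json"
}
"""

manifest = load_manifest(EXAMPLE)
```

Logging `manifest_id(manifest)` with every result gives you a compact way to tell whether two runs were specified identically.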

—

Step 4. Freeze the environment, not just the code

Even if you pin library versions in requirements.txt, that’s not fully deterministic. System libraries, OS-level differences and even the exact Python interpreter build can change behavior in floating-point math or optimization routines. For serious work, you want the environment to be containerized and versioned. In crypto, where performance tweaks matter, slight changes in numerical libs can alter how a fast path rounds PnL or handles underflows, leading to tiny differences that accumulate.

Use Docker (or a similar container stack) and build images with explicit versions of Python, libraries, and OS base images. Tag images with both a human label (e.g., backtest-v1.4) and a content hash. Your backtest run manifest should reference the exact image tag. That way, “re-run backtest” boils down to: pull the same container, pull the same data, run the same code commit with the same manifest. If you can, make your CI/CD pipeline build and push the image automatically each time core strategy code changes, so humans never have to guess which environment they used last quarter.
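Alongside the image tag, it can help to record what the process itself sees at runtime, so a mismatch between the declared and actual environment is visible. The following is a sketch under stated assumptions: `environment_fingerprint` is a hypothetical helper, and the image tag is passed in by the caller because a process cannot reliably discover its own container tag.

```python
import hashlib
import json
import platform
import sys
from importlib import metadata


def environment_fingerprint(image_tag: str) -> dict:
    """Collect interpreter, OS and installed-package versions plus a combined hash."""
    packages = []
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with unreadable metadata
            packages.append(f"{name}=={dist.version}")
    info = {
        "image_tag": image_tag,
        "python": sys.version,
        "implementation": platform.python_implementation(),
        "platform": platform.platform(),
        "machine": platform.machine(),
        "packages": sorted(packages),
    }
    canonical = json.dumps(info, sort_keys=True)
    info["fingerprint"] = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return info


env = environment_fingerprint("backtest-v1.4")
```

Storing `env["fingerprint"]` with each run lets you spot the case where two runs claim the same image tag but were executed with different packages or interpreter builds.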

—

Step 5. Put infra drift on a short leash

Self-hosted setups drift over time: GPU drivers, OS upgrades, cron jobs, system time configs. Add in exchange-specific APIs and rate limits, and you can end up with test runs that look similar but behave differently. One underrated tactic is to treat your infrastructure as code as well: Terraform, Ansible or similar tools to define the environment where backtests are executed, then lock and version those definitions. That makes “spin up the same cluster again” a single command instead of a weekend project.

If you don’t want to manage all of that, leaning on a cloud-based crypto backtesting service can actually improve reproducibility, not just convenience. As long as they version their environments and let you pin a specific runtime, you offload hardware quirks and OS issues. The key is to ensure you can export their environment ID into your run manifest, and ideally also export your runs as code or config files rather than only through a UI, otherwise you’re just outsourcing the same ambiguity to a third party.

—

Step 6. Build an audit trail for every run

A reproducible backtest is not just “can I re-run it?” but also “can I explain what happened?” You want a machine-readable log of metadata: start time, end time, manifest file used, git commit, Docker image, data hashes, random seeds, number of trades, and key metrics (like Sharpe and max drawdown), plus warnings and exceptions. A small SQLite database or a lightweight time-series DB works fine for this; the point is persistence and queryability, not fancy dashboards.

Whenever a backtest runs, it should register itself in this run registry. If you later see a suspiciously good result, you can look up the exact conditions and rerun with one command. Many crypto backtesting tools give you pretty charts but no deep provenance; they’ll show “backtest #142” without any indication of which dataset version or code commit produced it. If you’re serious about capital allocation, treat any result without a full audit trail as entertainment, not evidence.
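A run registry along these lines fits comfortably in SQLite via Python's built-in `sqlite3` module. The table layout and function names below are one possible design, assumed for illustration; the columns simply mirror the metadata listed above.

```python
import json
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS runs (
    run_id        INTEGER PRIMARY KEY AUTOINCREMENT,
    started_at    TEXT NOT NULL,
    finished_at   TEXT NOT NULL,
    manifest_path TEXT NOT NULL,
    git_commit    TEXT NOT NULL,
    docker_image  TEXT NOT NULL,
    data_hashes   TEXT NOT NULL,  -- JSON object: file -> sha256
    seeds         TEXT NOT NULL,  -- JSON object: stream -> seed
    n_trades      INTEGER NOT NULL,
    sharpe        REAL,
    max_drawdown  REAL,
    warnings      TEXT NOT NULL   -- JSON list of strings
)
"""

COLUMNS = (
    "started_at", "finished_at", "manifest_path", "git_commit", "docker_image",
    "data_hashes", "seeds", "n_trades", "sharpe", "max_drawdown", "warnings",
)
JSON_COLUMNS = ("data_hashes", "seeds", "warnings")


def open_registry(path: str) -> sqlite3.Connection:
    """Open (or create) the registry database and ensure the table exists."""
    conn = sqlite3.connect(path)
    conn.row_factory = sqlite3.Row
    conn.execute(SCHEMA)
    return conn


def register_run(conn: sqlite3.Connection, **fields) -> int:
    """Insert one run; every column must be supplied explicitly."""
    if set(fields) != set(COLUMNS):
        raise ValueError(f"expected exactly these fields: {COLUMNS}")
    values = [
        json.dumps(fields[c], sort_keys=True) if c in JSON_COLUMNS else fields[c]
        for c in COLUMNS
    ]
    placeholders = ", ".join("?" for _ in COLUMNS)
    cur = conn.execute(
        f"INSERT INTO runs ({', '.join(COLUMNS)}) VALUES ({placeholders})", values
    )
    conn.commit()
    return cur.lastrowid


def lookup_run(conn: sqlite3.Connection, run_id: int) -> dict:
    """Fetch the full provenance record for a run, decoding the JSON columns."""
    row = conn.execute("SELECT * FROM runs WHERE run_id = ?", (run_id,)).fetchone()
    if row is None:
        raise KeyError(run_id)
    record = dict(row)
    for key in JSON_COLUMNS:
        record[key] = json.loads(record[key])
    return record
```

With this in place, “backtest #142” becomes `lookup_run(conn, 142)`, which returns the manifest path, commit, image, data hashes and seeds needed to rerun it.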

—

Step 7. Bake in sanity checks and invariants

Reproducible garbage is still garbage. You want built-in sanity checks so that whenever you re-run a backtest and the result changes, you know whether it’s within expected variance or indicates a real issue. For example, checks like “total traded volume must not exceed N times the exchange’s real historical volume” or “cash balance must never drop below -margin_limit - epsilon” act as invariants. If they break, your backtest is invalid regardless of how easily you can reproduce it.
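The two example invariants can be expressed as a small checker that returns a list of violations. In this sketch the volume rule is applied per day rather than over the whole run, which is an assumption, and `DailySnapshot` and its fields are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DailySnapshot:
    day: str
    traded_volume: float     # volume the simulated strategy traded that day
    exchange_volume: float   # real historical exchange volume that day
    min_cash_balance: float  # lowest cash balance reached that day


def check_invariants(
    snapshots: list[DailySnapshot],
    max_volume_multiple: float,
    margin_limit: float,
    epsilon: float = 1e-9,
) -> list[str]:
    """Return human-readable violations; an empty list means all checks passed."""
    violations = []
    for snap in snapshots:
        if snap.traded_volume > max_volume_multiple * snap.exchange_volume:
            violations.append(
                f"{snap.day}: traded volume {snap.traded_volume} exceeds "
                f"{max_volume_multiple} x exchange volume {snap.exchange_volume}"
            )
        if snap.min_cash_balance < -margin_limit - epsilon:
            violations.append(
                f"{snap.day}: cash balance {snap.min_cash_balance} fell below "
                f"-margin_limit ({-margin_limit})"
            )
    return violations
```

A backtest runner would typically treat any non-empty result as a hard failure and refuse to report metrics for that run.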

Go one step further and store summary “fingerprints” per run: hashes of the daily PnL series, the trade count per day, and the exposure profile. On re-run, you recompute those and compare. If your PnL timeline hash differs, you know this isn’t the same run, even if the final CAGR looks similar. This pattern is basically snapshot testing for backtests; it’s a cheap way to detect subtle environmental or data changes that might otherwise slip through visual inspection.
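A fingerprint of this kind might look like the sketch below, which hashes the daily PnL and daily trade counts separately so you can see which one moved. The function names and the choice to round PnL to 8 decimal places before hashing are assumptions; exposure profiles are omitted for brevity but would follow the same pattern.

```python
import hashlib


def series_fingerprint(values_by_day: dict[str, float], places: int = 8) -> str:
    """Hash a day -> value series in date order, at fixed decimal precision."""
    digest = hashlib.sha256()
    for day in sorted(values_by_day):
        # round(...) + 0.0 turns a negative zero into 0.0 so both format identically
        value = round(values_by_day[day], places) + 0.0
        digest.update(f"{day}:{value:.{places}f}\n".encode("utf-8"))
    return digest.hexdigest()


def run_fingerprints(daily_pnl: dict[str, float], daily_trades: dict[str, int]) -> dict[str, str]:
    """Compute one fingerprint per summary series."""
    return {
        "pnl": series_fingerprint(daily_pnl),
        "trades": series_fingerprint(daily_trades, places=0),
    }


def changed_fingerprints(stored: dict[str, str], recomputed: dict[str, str]) -> list[str]:
    """Names of the series whose fingerprint no longer matches the stored run."""
    return sorted(k for k in stored if stored[k] != recomputed.get(k))
```

Rounding before hashing is a design trade-off: it tolerates most bit-level floating-point noise, but a value sitting right on a rounding boundary can still flip the hash, so a mismatch should trigger a closer diff rather than an automatic verdict.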

—

Step 8. Unconventional tricks that help a lot

There are a few non-obvious habits that massively improve reproducibility. One is to log “raw decisions”: instead of only storing trades, also record each decision event—what signals were observed, what the engine decided (“hold”, “buy”, “reduce size”), and why. This can be as simple as journaling features and chosen action. When you rerun, you can compare decisions event-by-event. If a specific candle now triggers a buy where it didn’t before, you’ve located the divergence point without combing through all results.
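A decision journal can be as plain as one JSON object per line, and locating the divergence point is then a linear scan over two journals. This is a minimal sketch; the event layout (timestamp, symbol, features, action, reason) and the function names are assumptions for illustration.

```python
import json
from itertools import zip_longest
from pathlib import Path


def append_decision(journal: Path, event: dict) -> None:
    """Append one decision event as a JSON line with stable key order."""
    with journal.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(event, sort_keys=True) + "\n")


def load_journal(journal: Path) -> list[dict]:
    """Read all decision events back in the order they were written."""
    with journal.open(encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]


def first_divergence(baseline: list[dict], rerun: list[dict]):
    """Return (index, old_event, new_event) for the first mismatch, else None.

    A journal that ends early shows up as a mismatch against None.
    """
    for index, (old, new) in enumerate(zip_longest(baseline, rerun)):
        if old != new:
            return index, old, new
    return None
```

Events are compared exactly, so logged float features should be rounded to a fixed precision before journaling if you want to ignore floating-point noise.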

Another unconventional move is to maintain a small set of “canonical test days” that you run before any large backtest batch. Think of them as unit tests for your engine, built on real historical chaos (crazy wicks, delistings, funding spikes). Pick a few nasty days (e.g., the May 19, 2021 crash) and store the exact expected trades and PnL for a simple sanity-check strategy. Every time you upgrade your engine or switch infra, run these canonical tests first. If any output changes, stop and investigate before you trust larger, more complex experiments.

—

Step 9. Tooling choices that actually matter

People often ask which crypto backtesting software or frameworks they should pick to get reproducibility “for free”. In reality, no tool saves you from bad discipline, but some tools make good discipline easier. When comparing the best crypto backtesting platforms, prioritize those with explicit run configs, versioned environments, easy export of run metadata, and clean APIs for plugging in your own data layer rather than forcing you into their opaque data store.

If you’re working in Python, choose a Python library for crypto backtesting that doesn’t hide randomness, lets you inject your own execution and fee models, and doesn’t do sneaky in-place modifications of your input data. Also look for libraries that support deterministic multi-processing or at least let you control how runs are sharded across workers. If your engine silently reorders tasks for speed, you should still be able to tie each run to a fixed manifest and a predictable RNG seed scheme that doesn’t change as you add more assets or strategies.

—

Step 10. Habits for beginners that pay off quickly

If you’re just starting with backtesting in crypto, it’s tempting to chase fancy features: 50 indicators, genetic optimization, flashy dashboards. Instead, focus first on three simple habits: (1) always run from a config file, not ad-hoc arguments; (2) always commit your code before important backtests; (3) always log run metadata in a place that isn’t your memory. These cost almost nothing and make it possible to grow from a single script on a laptop to a team-level research pipeline without rewriting everything from scratch.

For automation, even simple shell scripts that wrap your chosen automated crypto trading backtest tools with standardized flags and logging can act as a reproducibility guardrail. As your setup matures, you can move that workflow into CI pipelines, attach containers, add the run registry and integrate with whichever cloud or on-prem infra you prefer. The point isn’t to copy some idealized “big firm” setup; it’s to steadily move from “I think I ran this yesterday” to “I know exactly what I ran, and I can prove it any time I need” with as little friction as possible.

—

Final thoughts: treat backtests like experiments, not marketing

The crypto space is full of glossy equity curves produced in beautiful UIs. Without reproducibility, they’re mostly theater. When you apply the practices above—data versioning, environment pinning, manifests, run registries, deterministic randomness and sanity checks—you turn those curves into something closer to scientific evidence. You can still be wrong about your assumptions, but at least you’re wrong in a way that can be diagnosed, replayed and improved, rather than lost in the fog of changing APIs, drifting infra and forgotten flags. That’s the kind of foundation you want before you let real capital follow your code.