Why crypto data normalization became a thing

By 2025, nobody is surprised that the same Bitcoin trade can show up with three different prices depending on the exchange, timestamp format and quote currency. Early crypto desks in 2015–2017 tried to glue CSV exports together in Excel; later everyone rushed to build home‑grown scripts pulling from every cryptocurrency market data API they could find. The result was predictable: messy schemas, duplicated logic, and dashboards whose numbers changed every time a new exchange was added. Normalization pipelines emerged not from academic purity but from sheer operational pain: auditors, risk teams and quants needed one consistent version of “what actually traded and when”.

What “normalization” really means in crypto

Behind the buzzwords, a crypto normalization pipeline does three boring but crucial things: it standardizes identifiers, unifies time and price conventions, and reconciles anomalies. Tokens get mapped to canonical symbols, even when exchanges invent creative tickers. Prices and volumes are brought to a common quote and unit, with fees and funding costs adjusted consistently. Timestamps are snapped to a uniform time zone and precision. Finally, outliers, gaps and forks are tagged with metadata instead of silently ignored. This is what separates ad‑hoc scripts from serious enterprise crypto data management solutions that survive audits and model reviews.
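To make those three steps concrete, here is a minimal Python sketch of normalizing a single raw trade. Everything named in it is an assumption for illustration: the raw field names (`base`, `quote`, `price`, `amount`, `ts_ms`), the one-entry alias table, the `price_outlier` flag and the 10% deviation threshold. Quote-currency conversion and fee adjustment are left out to keep it short.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from decimal import Decimal
from typing import Optional, Tuple

# Illustrative alias table (an assumption); a real one would live in a
# maintained asset master, not in code.
SYMBOL_ALIASES = {
    ("kraken", "XBT"): "BTC",
}

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


@dataclass(frozen=True)
class NormalizedTrade:
    venue: str
    base: str
    quote: str
    price: Decimal
    amount: Decimal
    ts: datetime                  # always timezone-aware UTC
    flags: Tuple[str, ...] = ()   # anomalies are tagged, never dropped


def canonical_symbol(venue: str, ticker: str) -> str:
    """Map a venue-specific ticker to a canonical symbol."""
    ticker = ticker.upper()
    return SYMBOL_ALIASES.get((venue, ticker), ticker)


def normalize_trade(
    venue: str,
    raw: dict,
    ref_price: Optional[Decimal] = None,
    max_deviation: Decimal = Decimal("0.10"),
) -> NormalizedTrade:
    # Parse numbers via str() so floats from JSON do not leak binary noise.
    price = Decimal(str(raw["price"]))
    amount = Decimal(str(raw["amount"]))
    # Epoch milliseconds -> UTC datetime, using exact integer arithmetic.
    ts = EPOCH + timedelta(milliseconds=int(raw["ts_ms"]))

    flags = []
    if ref_price is not None and ref_price > 0:
        if abs(price - ref_price) / ref_price > max_deviation:
            flags.append("price_outlier")

    return NormalizedTrade(
        venue=venue,
        base=canonical_symbol(venue, raw["base"]),
        quote=canonical_symbol(venue, raw["quote"]),
        price=price,
        amount=amount,
        ts=ts,
        flags=tuple(flags),
    )


raw = {"base": "xbt", "quote": "usd", "price": "50000.5",
       "amount": "0.1", "ts_ms": 1700000000123}
trade = normalize_trade("kraken", raw, ref_price=Decimal("40000"))
```

In this example the trade comes back with `base` set to `BTC` and a `price_outlier` flag, because the price sits more than 10% away from the reference. The record is kept and annotated rather than discarded, which is the behavior the paragraph above argues for.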

Approach #1: DIY scripts and open‑source stacks

The most intuitive approach is still “grab Python and start coding”. Teams wire together open‑source collectors, message queues and transformation jobs, building a custom real‑time crypto data integration platform from Kafka, Airflow and a few internal libraries. The upside is flexibility: you control the schema, can tweak every mapping rule and avoid vendor lock‑in. For research‑heavy funds or niche derivatives venues this is attractive. The downside arrives with scale: maintaining dozens of exchange connectors, monitoring latency, and patching edge cases across chains eats into engineering time that could otherwise go into new trading strategies rather than endless plumbing.

Approach #2: Managed crypto data pipeline services

As volumes exploded after 2020, managed crypto data pipeline services started filling the gap. They collect raw trades, order books and on‑chain events, normalize the basics and expose the result over APIs, streams or cloud storage. For many firms, this saves years of build time: you can plug normalized feeds into risk systems, backtesting tools and internal dashboards without hiring a large infra team. The catch is that generic schemas rarely match every internal quirk: custom funding logic, proprietary instrument groupings or exotic options may still need a secondary transformation layer. You trade some control for faster onboarding and predictable SLAs.

Approach #3: Hybrid pipelines with consulting support

A growing pattern in 2025 is the hybrid model: firms bring in crypto data engineering consultants to design the target schema, quality rules and governance, then stitch together vendor feeds and in‑house logic. Normalized vendor data covers spot and futures basics, while specialized modules handle complex per‑venue details, OTC flows or private market structures. This approach spreads risk: you are not locked into a single provider, yet you also avoid reinventing the wheel for common entities like assets, venues and corporate actions. The main challenge becomes organizational, not technical: someone has to own the canonical definitions and arbitration rules.

Key trade‑offs: latency, coverage, governance

Every normalization strategy balances three axes. First, latency: HFT shops need millisecond‑level pipelines and will often normalize only minimal fields inline, deferring heavy enrichment to slower paths. Second, coverage: cross‑venue arbitrage and DeFi strategies require a broad span of CEX, DEX and on‑chain data, which stresses schemas designed only around traditional order books. Third, governance: as soon as risk, finance and quant teams all rely on the same numbers, disputes about symbol mappings or funding calculations become political. The more stakeholders you have, the more important a transparent rulebook and reproducible transformations become.

Technologies: pros and cons in practice

Batch‑oriented tools like Airflow and cloud warehouses work well for T+1 reporting, but strain under streaming workloads. Stream processors and event buses shine for tick‑level updates, yet debugging stateful joins across thousands of pairs can be painful. Vendor‑hosted real‑time crypto data integration platforms remove some of this complexity, but you inherit their design choices around partitioning and retention. Columnar analytics engines speed up backtests, while time‑series stores handle high‑frequency ticks more gracefully. No single technology wins outright; the pragmatic move is polyglot: match tooling to latency tiers instead of forcing everything into one database or framework.

Practical recommendations for 2025

Design principles before tools

Before picking any stack, write down rules for identifiers, time, and currency normalization that would still make sense if you doubled venues and assets. Decide how to treat delistings, token migrations, and chain forks. Document what “official close” means per venue. This lightweight data contract will outlive your first pipeline and make later vendor changes survivable. Even managed crypto data pipeline services integrate more smoothly when they plug into a clearly defined target schema instead of a tangle of one‑off queries and hardcoded field names scattered across dashboards and notebooks.
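One way to keep such a contract honest is to express part of it as code. The sketch below, under stated assumptions, shows an identifier rule that survives delistings and token migrations: each venue ticker maps to a canonical asset only within a validity window. The venue `venue_a`, the ticker `ABC`, the asset ids and the migration date are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class TickerMapping:
    venue: str
    ticker: str
    asset_id: str
    valid_from: datetime                  # inclusive, UTC
    valid_to: Optional[datetime] = None   # exclusive; None means still listed

    def covers(self, ts: datetime) -> bool:
        return self.valid_from <= ts and (self.valid_to is None or ts < self.valid_to)


def resolve_asset(mappings: List[TickerMapping], venue: str,
                  ticker: str, ts: datetime) -> str:
    """Return the canonical asset id for a venue ticker at a point in time."""
    if ts.tzinfo is None:
        # Time rule of the contract: naive timestamps are rejected outright.
        raise ValueError("timestamp must be timezone-aware")
    hits = [m for m in mappings
            if m.venue == venue and m.ticker == ticker and m.covers(ts)]
    if len(hits) != 1:
        raise LookupError(f"{len(hits)} mappings for {venue}:{ticker} at {ts.isoformat()}")
    return hits[0].asset_id


# Hypothetical token migration: the same ticker points to a new asset
# from 2024-01-01 onward.
MIGRATION = datetime(2024, 1, 1, tzinfo=timezone.utc)
MAPPINGS = [
    TickerMapping("venue_a", "ABC", "abc-v1",
                  datetime(2020, 1, 1, tzinfo=timezone.utc), MIGRATION),
    TickerMapping("venue_a", "ABC", "abc-v2", MIGRATION),
]
```

Resolving by `(venue, ticker, timestamp)` rather than by ticker alone means historical rows keep pointing at the asset that actually traded at the time, and an ambiguous or missing mapping fails loudly instead of joining to the wrong asset.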

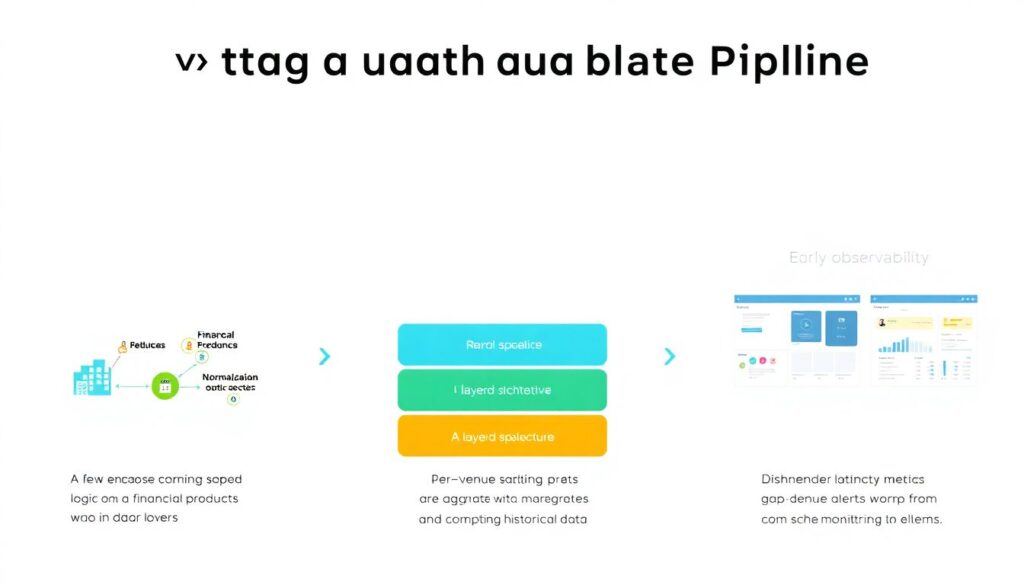

Concrete steps to build a robust pipeline

1. Start with a narrow slice: a few key exchanges and products, but implement the full normalization logic and quality checks.

2. Separate raw, normalized and aggregated layers so bad mappings do not corrupt your history.

3. Introduce observability early: per‑venue latency, gap detection and schema drift alerts.

4. Use a reference asset master and venue registry as the backbone of your joins.

5. Periodically backfill history from a stable cryptocurrency market data API to validate your transformations and detect silent logic regressions.
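The observability checks from step 3 do not need heavy tooling to get started. As a rough sketch, gap detection and schema drift alerts can begin as two small functions. The expected field set and the 30‑second threshold used here are assumptions; real values depend on the venue and product.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Iterable, List, Tuple

# Hypothetical set of fields a raw trade message is expected to carry.
EXPECTED_FIELDS = frozenset({"base", "quote", "price", "amount", "ts_ms"})


def find_gaps(timestamps: Iterable[datetime],
              max_gap: timedelta) -> List[Tuple[datetime, datetime]]:
    """Return (start, end) pairs where consecutive events are further apart than max_gap."""
    ordered = sorted(timestamps)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > max_gap]


def schema_drift(record: dict) -> Dict[str, List[str]]:
    """Report fields that disappeared from, or newly appeared in, a raw record."""
    keys = set(record)
    return {
        "missing": sorted(EXPECTED_FIELDS - keys),
        "unexpected": sorted(keys - EXPECTED_FIELDS),
    }


t0 = datetime(2025, 1, 1, tzinfo=timezone.utc)
events = [t0, t0 + timedelta(seconds=1), t0 + timedelta(seconds=40)]
gaps = find_gaps(events, max_gap=timedelta(seconds=30))
drift = schema_drift({"base": "BTC", "quote": "USD", "px": "50000"})
```

Here `gaps` contains the single 39‑second hole between the second and third event, and `drift` reports `px` as unexpected alongside the missing `amount`, `price` and `ts_ms` fields. Running both checks on the raw layer, before normalization, keeps a venue-side change from silently corrupting the layers built on top.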

Historical context: from chaos to governance

In the ICO boom, data was mostly an afterthought; traders were happy if APIs didn’t crash during volatility. Around 2018–2019, as institutions tiptoed in, spreadsheets turned into warehouses and ad‑hoc scripts into scheduled ETL. The DeFi boom from 2020 onward blew up complexity again: on‑chain events, liquidity pools and NFTs did not fit neatly into exchange‑centric schemas. By 2023, regulators and auditors started asking hard questions about data lineage and reproducibility. This pushed serious desks toward formal data governance, lineage tools and more rigorous enterprise crypto data management solutions that mirror what exists in traditional finance.

Current trends and what’s next

By 2025, the hottest topic is unifying centralized and decentralized sources in a single logical model. Firms want a view where CEX order books, DEX pools and on‑chain lending positions all normalize to comparable notions of liquidity and risk. Another visible trend is schema‑as‑code: normalization rules versioned alongside application code, with tests and automated rollbacks. Vendors offering crypto data engineering consulting increasingly bundle reference schemas and governance playbooks instead of only raw feeds. The direction is clear: less ad‑hoc mapping in spreadsheets, more opinionated platforms and shared best practices around what “good” normalized crypto data looks like.
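Schema‑as‑code is easier to picture with a toy example. The sketch below assumes a rule set is just a version string plus an alias table, and that "rollback" means refusing to promote a candidate that fails a set of golden cases. In practice the rules would live in version control and the check would run in CI; the names `RuleSet`, `GOLDEN_CASES` and `promote` are illustrative, not taken from any particular tool.

```python
from typing import Dict, List, NamedTuple, Tuple


class RuleSet(NamedTuple):
    version: str
    aliases: Dict[str, str]   # venue ticker -> canonical symbol


def apply_rules(rules: RuleSet, ticker: str) -> str:
    ticker = ticker.upper()
    return rules.aliases.get(ticker, ticker)


# Golden cases: (raw ticker, expected canonical symbol). Illustrative only.
GOLDEN_CASES: List[Tuple[str, str]] = [("XBT", "BTC"), ("eth", "ETH")]


def failing_cases(rules: RuleSet,
                  cases: List[Tuple[str, str]]) -> List[Tuple[str, str, str]]:
    """Return (raw, expected, actual) for every golden case the rules get wrong."""
    results = [(raw, expected, apply_rules(rules, raw)) for raw, expected in cases]
    return [r for r in results if r[1] != r[2]]


def promote(current: RuleSet, candidate: RuleSet,
            cases: List[Tuple[str, str]]) -> RuleSet:
    """Adopt the candidate only if it passes every golden case; otherwise keep current."""
    return candidate if not failing_cases(candidate, cases) else current


CURRENT = RuleSet("1.0.0", {"XBT": "BTC"})
GOOD = RuleSet("1.1.0", {"XBT": "BTC", "UST": "USDT"})
BROKEN = RuleSet("1.2.0", {"XBT": "XBT"})  # regression: alias no longer maps to BTC

active = promote(CURRENT, GOOD, GOLDEN_CASES)
active = promote(active, BROKEN, GOLDEN_CASES)
```

After both calls `active` is still version `1.1.0`: the good candidate was promoted and the broken one rejected. The point is that a mapping change becomes a reviewable, testable diff instead of an edit to a spreadsheet cell.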