Seeing the crypto data lake as a product, not a pet project

Why a crypto data lake is worth it, even on a tight budget

If you hack together a new script every time you need prices or on-chain metrics, you’re treating your own time as if it cost nothing. A crypto data lake gives you one consistent place for raw trades, order books, DeFi events and wallet flows, so you stop re-downloading and re-cleaning the same data. Even budget-friendly crypto data lake solutions can turn a pile of CSVs and API responses into a coherent asset that outlives any single strategy, dashboard or bot you’re experimenting with.

Picking an architecture that won’t bankrupt you

Local stack vs cloud storage vs managed services

On a budget, your first big decision is where the lake lives. A local stack (PostgreSQL, DuckDB, Parquet files on a cheap VPS) is dirt cheap and fast, but you become your own SRE and DBA. A pure cloud bucket plus serverless SQL is flexible, but it’s easy to overspend if you don’t cap how much data each query scans. A managed crypto data warehouse service looks pricey at first glance, but it can be cheaper in the long run once you factor in backups, monitoring and the hidden cost of your own time.
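Capping scans can be as simple as a pre-flight budget check. The sketch below assumes your engine can estimate the bytes a query will scan before it runs (BigQuery’s dry runs, for example, report this) and uses a hypothetical flat on-demand price per TiB. `check_scan_budget` and `PRICE_PER_TIB_USD` are illustrative names, not any provider’s API.

```python
TIB = 1024 ** 4
# Hypothetical placeholder price: replace with your provider's current on-demand rate.
PRICE_PER_TIB_USD = 5.0


def estimated_cost_usd(bytes_scanned: int, price_per_tib: float = PRICE_PER_TIB_USD) -> float:
    """Scan-billed cost: bytes scanned, converted to TiB, times the per-TiB price."""
    return bytes_scanned / TIB * price_per_tib


def check_scan_budget(bytes_scanned: int, max_cost_usd: float) -> float:
    """Return the estimated cost, or raise before the query runs if it exceeds the cap."""
    cost = estimated_cost_usd(bytes_scanned)
    if cost > max_cost_usd:
        raise RuntimeError(
            f"query would scan {bytes_scanned / TIB:.3f} TiB (~${cost:.2f}), "
            f"cap is ${max_cost_usd:.2f}"
        )
    return cost


# Example: a 200 GiB scan at the placeholder price stays under a $2 cap.
print(check_scan_budget(200 * 1024 ** 3, max_cost_usd=2.0))
```

In practice you would feed it the estimate from your engine’s dry run and abort the job when it raises. BigQuery also lets you set a maximum-bytes-billed limit so the engine enforces the cap itself.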

APIs, full-node indexing or hybrids: what to ingest

You can lean on a cheap crypto market data API, run your own full nodes, or mix the two. API-first is quick: you pay per call or per month, skip node maintenance, and get everything you need for prices and volumes. Node-first gives you full control and every on-chain event, but it eats RAM, disk and ops time. A hybrid setup usually wins for small teams: APIs for market data, your own full node or a third-party indexer for deeper event logs, and everything normalized into Parquet before it is loaded into the data lake.
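The “normalize everything” step mostly means agreeing on a few conventions (UTC timestamps, exact decimals, lowercase identifiers, a lineage tag) and mapping each source onto them. This sketch assumes two input shapes: a REST candle row of the form `[open_time_ms, open, high, low, close, volume]` with string prices (common among exchanges, but check your provider) and a raw `eth_getLogs`-style log whose block timestamp you fetched separately. The record types and function names are hypothetical; writing the records to Parquet (for example with pyarrow) is left out.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from decimal import Decimal


@dataclass(frozen=True)
class Candle:
    ts: datetime      # candle open time, always timezone-aware UTC
    venue: str
    asset: str
    open: Decimal
    high: Decimal
    low: Decimal
    close: Decimal
    volume: Decimal
    source: str       # lineage tag: where the row came from


@dataclass(frozen=True)
class OnchainEvent:
    ts: datetime      # block timestamp, UTC
    chain: str
    block_number: int
    tx_hash: str
    log_index: int
    contract: str     # lowercased so joins don't miss on checksum casing
    topic0: str       # event signature hash; decoding to a name is a later step
    source: str


def candle_from_api(raw: list, venue: str, asset: str) -> Candle:
    """Assumed API shape: [open_time_ms, open, high, low, close, volume], prices as strings."""
    open_ms, o, h, lo, c, v = raw[:6]
    return Candle(
        ts=datetime.fromtimestamp(open_ms / 1000, tz=timezone.utc),
        venue=venue.lower(),
        asset=asset.upper(),
        open=Decimal(o), high=Decimal(h), low=Decimal(lo),
        close=Decimal(c), volume=Decimal(v),
        source=f"api:{venue.lower()}",
    )


def event_from_rpc_log(log: dict, chain: str, block_ts: int) -> OnchainEvent:
    """Normalize a JSON-RPC log object; hex-encoded numbers become ints."""
    return OnchainEvent(
        ts=datetime.fromtimestamp(block_ts, tz=timezone.utc),
        chain=chain.lower(),
        block_number=int(log["blockNumber"], 16),
        tx_hash=log["transactionHash"].lower(),
        log_index=int(log["logIndex"], 16),
        contract=log["address"].lower(),
        topic0=log["topics"][0].lower(),
        source="node",
    )
```

Passing prices as strings into `Decimal` keeps them exact; if your API returns floats, decide deliberately whether a float column is good enough before converting.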

Learning from people who did it with limited cash

Inspiring lightweight builds that scaled later

One indie quant started with nothing more than a Raspberry Pi, DuckDB and an affordable cryptocurrency historical data provider. He stored candles and funding rates as compressed Parquet, versioned them with Git LFS, and queried them locally. Only after his backtests became CPU-bound did he move to a small cloud instance. Another example is a two-person analytics studio that began with BigQuery’s free tier, tables partitioned by day and clustered by asset, and alerts on query costs so experiments never turned into runaway invoices.

Cases of successful projects and their trade-offs

A DAO analytics guild built a lake purely on S3 plus open-source Spark. Storage was cheap, but contributors struggled with cluster configs and flaky jobs, and more time went into infrastructure than into models. Contrast that with a small trading desk that chose a mid-tier managed warehouse backed by a curated crypto data warehouse service. Their bill was higher per gigabyte, yet analysts could run SQL directly and deliver signals faster. The lesson: “cheaper per TB” is not always cheaper than “fewer hours burned on maintenance.”

Designing a maintainable data lake from day one

Minimal schema, maximum clarity

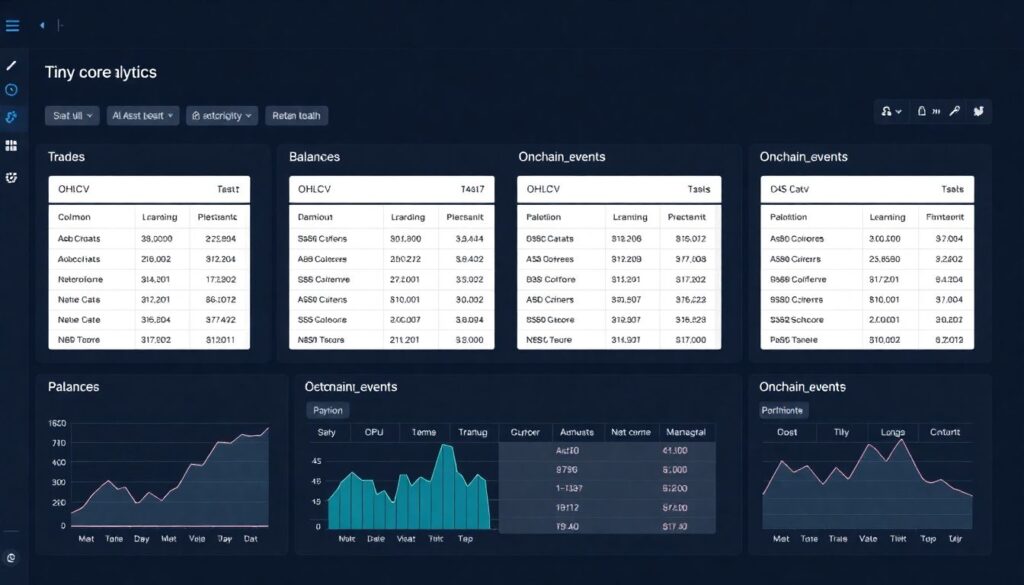

You don’t need enterprise-grade modeling, but you do need consistency. Start with a tiny core schema: trades, ohlcv, balances, onchain_events. Fix column names and types up front, store every timestamp in one time zone (UTC is the usual choice), and partition by date plus asset or chain. Even if you later migrate to the best crypto analytics platform for developers, you can carry the underlying Parquet or CSV layout over as-is. Good naming plus predictable partitions will save you from expensive full-table scans and painful refactors as the dataset grows.
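Writing the schema and partition scheme down as code keeps every job honest. The sketch below is one possible layout, not a standard: the column lists, the logical type names and `partition_path` are hypothetical, and the types would need mapping onto your engine’s real ones. It uses Hive-style `key=value` directories, which engines such as DuckDB and Spark can read back as columns, so queries filtered on date and asset or chain only touch matching folders.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical core schema: table -> ordered (column, logical type) pairs.
CORE_SCHEMA = {
    "trades": [("ts", "timestamp_utc"), ("venue", "string"), ("asset", "string"),
               ("price", "decimal"), ("qty", "decimal"), ("side", "string"),
               ("trade_id", "string")],
    "ohlcv": [("ts", "timestamp_utc"), ("venue", "string"), ("asset", "string"),
              ("open", "decimal"), ("high", "decimal"), ("low", "decimal"),
              ("close", "decimal"), ("volume", "decimal")],
    "balances": [("ts", "timestamp_utc"), ("chain", "string"), ("address", "string"),
                 ("token", "string"), ("amount", "decimal")],
    "onchain_events": [("ts", "timestamp_utc"), ("chain", "string"), ("block_number", "int64"),
                       ("tx_hash", "string"), ("log_index", "int64"),
                       ("contract", "string"), ("topic0", "string")],
}

# Second partition key for each table, after the date.
PARTITION_KEY = {"trades": "asset", "ohlcv": "asset",
                 "balances": "chain", "onchain_events": "chain"}


def partition_path(root: str, table: str, ts: datetime, key_value: str) -> str:
    """Build a Hive-style directory such as root/trades/date=2024-03-01/asset=BTC."""
    if table not in CORE_SCHEMA:
        raise KeyError(f"unknown table {table!r}")
    # Reject naive or non-UTC timestamps so a row never lands in the wrong day.
    if ts.tzinfo is None or ts.utcoffset() != timedelta(0):
        raise ValueError("partition timestamps must be timezone-aware UTC")
    return f"{root.rstrip('/')}/{table}/date={ts:%Y-%m-%d}/{PARTITION_KEY[table]}={key_value}"


print(partition_path("s3://my-lake", "trades",
                     datetime(2024, 3, 1, 12, tzinfo=timezone.utc), "BTC"))
```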

Practical maintenance routine that fits side-project hours

Think of maintenance as a small daily workout, not a weekend crisis. A simple schedule might look like this:
1) Nightly ingestion jobs pull from APIs or nodes into a staging area.
2) A validation step checks row counts, timestamps and symbol mappings.
3) A transform stage writes clean Parquet to your main bucket.
4) A weekly job prunes old staging data and logs.
Automate alerts on failures and cost spikes so your side project doesn’t silently die or hand you a surprise bill.
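The validation step is the one most worth automating, because it decides whether a staging batch gets promoted. Here is a minimal sketch, assuming each staged row is a dict with a `symbol` and a timezone-aware `ts`, and that you maintain a hypothetical `symbol_map` from raw venue symbols to canonical ones. It collects problems instead of failing on the first one, so a single alert can report everything wrong with the batch.

```python
from datetime import datetime, timedelta, timezone


def validate_batch(rows, *, min_rows, window_start, window_end, symbol_map):
    """Return human-readable problems; an empty list means the batch may be promoted."""
    problems = []
    # 1) Row count: a sudden drop usually means an API outage or a pagination bug.
    if len(rows) < min_rows:
        problems.append(f"row count {len(rows)} below expected minimum {min_rows}")
    # 2) Symbol mapping: every raw symbol must map to a canonical asset.
    unknown = sorted({r["symbol"] for r in rows if r["symbol"] not in symbol_map})
    if unknown:
        problems.append(f"unmapped symbols: {', '.join(unknown)}")
    # 3) Timestamps: must be UTC and inside this run's ingestion window [start, end).
    for i, r in enumerate(rows):
        ts = r["ts"]
        if ts.tzinfo is None or ts.utcoffset() != timedelta(0):
            problems.append(f"row {i}: timestamp is not UTC")
        elif not (window_start <= ts < window_end):
            problems.append(f"row {i}: timestamp {ts.isoformat()} outside ingestion window")
    return problems


start = datetime(2024, 3, 1, tzinfo=timezone.utc)
batch = [{"symbol": "XBTUSD", "ts": start + timedelta(hours=1)}]
print(validate_batch(batch, min_rows=1, window_start=start,
                     window_end=start + timedelta(days=1),
                     symbol_map={"XBTUSD": "BTC-USD"}))
```

A non-empty result would block the transform stage and trigger the failure alert; the thresholds (`min_rows`, the window) are per-source judgment calls.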

Growing your skills and tooling without overspending

Resources for learning and experimentation

To level up without paying for bootcamps, combine free cloud credits, open-source tools and solid educational material. Focus on SQL fundamentals, columnar storage principles and streaming basics before chasing exotic frameworks. Many blogs and courses walk through building DIY crypto data lake solutions with S3-compatible storage, open-source orchestrators and serverless compute. Use public blockchain datasets, community-run archives and providers’ sandbox tiers to practice building pipelines, designing schemas and systematically benchmarking query patterns.

Choosing external services wisely

When you start paying for components, be deliberate. Compare vendors not only on headline price, but also on data coverage, latency and lock-in. For raw feeds, test more than one affordable cryptocurrency historical data provider before committing. For analytics, prototype on the free tier of what looks like the best crypto analytics platform for developers, then track real-world query patterns and costs. If you later switch to a different crypto data warehouse service, portable file formats and clear schemas will make the migration far less painful.