Why checkpointing matters in messy crypto research

Crypto research workflows are rarely linear. You scrape historical on-chain data, normalize it, run a battery of indicators, maybe train a model, then tweak one tiny parameter and watch everything diverge. Without explicit checkpointing you end up with notebooks named “final_v7_really_final.ipynb” and no reliable way to reproduce a promising backtest from two weeks ago. Checkpointing is the habit of freezing intermediate states (datasets, feature matrices, model weights, parameter configs, even environment versions) so you can roll back, branch experiments and verify results. In a domain where a tiny off‑by‑one timestamp error or slightly different exchange candles can flip a strategy from profitable to catastrophic, reproducible research is not mere academic hygiene; it is a risk‑management tool.

Principles of a reproducible blockchain research framework

Before picking tools, it helps to define what a reproducible blockchain research framework actually guarantees. At minimum, given a commit hash, a run ID or a tag, anyone on your team should be able to reconstruct the exact data slice used, the transformations applied, all hyperparameters, random seeds and library versions, and the resulting metrics and artifacts. If any of these pieces is missing, reproducibility degrades into guesswork. In practice this means treating every research run like a deployable software artifact: versioned, immutable and described by machine‑readable metadata rather than comments in a notebook. Once this discipline is in place, adding checkpointing is just a matter of deciding where and how often to persist state.
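As an illustration of machine-readable run metadata, here is a minimal sketch of a manifest holding the items listed above. `RunManifest` and its field names are hypothetical, not taken from any particular tracking tool; the point is that everything needed to reconstruct a run lives in one serializable record.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass(frozen=True)
class RunManifest:
    """Hypothetical record of everything needed to reconstruct one research run."""
    run_id: str
    git_commit: str                      # exact code version
    data_slice_id: str                   # ID of the input dataset checkpoint
    transformations: tuple[str, ...]     # ordered names of applied transformation steps
    hyperparameters: dict                # strategy or model parameters
    seeds: dict                          # e.g. {"global": 42}
    library_versions: dict               # e.g. {"numpy": "1.26.4"}
    metrics: dict = field(default_factory=dict)
    artifacts: tuple[str, ...] = ()      # relative paths of produced files

    def to_json(self) -> str:
        # sort_keys gives a stable text representation for hashing and diffing
        return json.dumps(asdict(self), sort_keys=True, indent=2)

    @classmethod
    def from_json(cls, text: str) -> "RunManifest":
        raw = json.loads(text)
        # JSON has no tuples, so restore them explicitly
        raw["transformations"] = tuple(raw["transformations"])
        raw["artifacts"] = tuple(raw["artifacts"])
        return cls(**raw)
```

A manifest that survives a JSON round trip unchanged can be stored next to every checkpoint and reloaded later to rebuild the run.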

What exactly should you checkpoint?

In crypto analytics, people tend to either checkpoint nothing or dump everything raw. Both extremes are painful. The pragmatic approach is to checkpoint semantically meaningful stages. Typically these include raw data snapshots straight from a node or API; cleaned, deduplicated and time‑aligned datasets; engineered features aligned to your prediction or signal horizon; model or strategy configs plus weights; and final evaluation reports. Each of these is expensive to recompute yet relatively compact to store in a compressed columnar format. By attaching a stable identifier to each stage, you can reconstruct a research run by wiring together the right checkpoints, without re‑scraping or re‑training every time.
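One way to get stable identifiers is to derive each stage's ID from its name, its parameters and the IDs of its inputs, so that any upstream change produces a new ID. The sketch below assumes that scheme; `stage_id` and the example parameters are hypothetical.

```python
import hashlib
import json


def stage_id(stage: str, params: dict, upstream_ids: list[str]) -> str:
    """Derive a stable checkpoint ID from a stage name, its parameters and its inputs.

    Any change to the parameters or to an upstream checkpoint yields a new ID,
    so a downstream stage can never silently reuse stale inputs.
    """
    payload = json.dumps(
        # Inputs are treated as a set, so their order does not affect the ID.
        {"stage": stage, "params": params, "upstream": sorted(upstream_ids)},
        sort_keys=True,
        separators=(",", ":"),
    )
    digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
    return f"{stage}-{digest}"


# Wire the typical stages together: raw -> clean -> features -> model -> report
raw = stage_id("raw", {"source": "node", "from_block": 20_000_000, "to_block": 20_100_000}, [])
clean = stage_id("clean", {"dedupe": True, "bar": "1h"}, [raw])
features = stage_id("features", {"horizon_h": 4}, [clean])
model = stage_id("model", {"kind": "ridge", "alpha": 1.0}, [features])
report = stage_id("report", {}, [model])
```

Changing the bar size in the cleaning stage changes `clean`, and therefore every ID downstream of it, which is exactly the signal that those checkpoints must be rebuilt.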

Designing checkpoints for blockchain data pipelines

On‑chain data is append‑only, but your derived views are not. Indexing, decoding events, merging with off‑chain market data and repairing missing candles can all change results dramatically. To keep this under control, your data platform should treat the raw chain state as immutable and version everything built on top of it. A simple pattern is to anchor checkpoints to block heights or timestamps. When you snapshot “raw_trades_2024‑09‑01_UTC”, store not just the events but also the RPC endpoint, node version and any query filters. Later, when you enrich the data with labels or liquidity metrics, checkpoint again under a new logical name while preserving a pointer to the original raw snapshot as lineage metadata.
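The provenance and lineage fields described above fit in a small frozen record. In this sketch, `SnapshotMeta` and `derive` are hypothetical names, and the chain, block numbers, endpoint and node version are placeholder values rather than real measurements.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class SnapshotMeta:
    """Hypothetical provenance record stored next to every data snapshot."""
    name: str                      # logical name, e.g. "raw_trades_2024-09-01_UTC"
    chain: str
    from_block: int                # inclusive block-height anchor
    to_block: int                  # inclusive block-height anchor
    rpc_endpoint: str
    node_version: str
    query_filters: dict
    parent: Optional[str] = None   # lineage pointer to the snapshot this was derived from


def derive(source: SnapshotMeta, new_name: str, extra_filters: dict) -> SnapshotMeta:
    """Metadata for an enriched view: same block anchor, new name, pointer to the source."""
    return replace(
        source,
        name=new_name,
        query_filters={**source.query_filters, **extra_filters},
        parent=source.name,
    )


raw = SnapshotMeta(
    name="raw_trades_2024-09-01_UTC",
    chain="ethereum",
    from_block=20_000_000,          # placeholder heights
    to_block=20_007_200,
    rpc_endpoint="https://rpc.example.invalid",
    node_version="node-client/vX.Y.Z",
    query_filters={"event": "Swap"},
)
labeled = derive(raw, "labeled_trades_2024-09-01_UTC", {"labels": "v2"})
```

Because the record is frozen, enrichment never mutates the raw snapshot's metadata; it produces a new record whose `parent` field preserves the lineage.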

File formats and storage for durable checkpoints

Storing gigabytes of CSVs on a laptop is not a strategy. For scalable crypto research, use columnar formats like Parquet or Feather, which compress well and preserve schema. Combine them with object storage (S3, GCS, self‑hosted MinIO) and a strict directory convention, for example project/dataset/version/run_id/. Each dataset version is immutable: when you change preprocessing logic, you create a new version instead of overwriting the old one. Add a small JSON or YAML manifest per checkpoint that records schema hashes, row counts, a hash of the underlying query and upstream checkpoint IDs. With this structure, rolling back means updating a pointer in your config, not reverse‑engineering which of ten files is the good one.
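A sketch of the directory convention plus a write-once manifest follows. The function names, the `data.parquet`/`manifest.json` file names and the `data_sha256` field are assumptions; the payload is passed in as bytes so the example does not depend on pyarrow or pandas, which would normally produce the Parquet file.

```python
import hashlib
import json
from pathlib import Path


def checkpoint_dir(root: Path, project: str, dataset: str, version: str, run_id: str) -> Path:
    """Build the project/dataset/version/run_id/ layout."""
    return root / project / dataset / version / run_id


def write_checkpoint(directory: Path, payload: bytes, manifest: dict) -> Path:
    """Write a data file plus a JSON manifest, refusing to overwrite an existing checkpoint.

    `payload` stands in for serialized Parquet/Feather bytes produced elsewhere.
    """
    directory.mkdir(parents=True, exist_ok=True)
    data_path = directory / "data.parquet"
    manifest_path = directory / "manifest.json"
    # Mode "x" raises FileExistsError if the file exists: versions are immutable.
    with open(data_path, "xb") as f:
        f.write(payload)
    full_manifest = {**manifest, "data_sha256": hashlib.sha256(payload).hexdigest()}
    with open(manifest_path, "x", encoding="utf-8") as f:
        json.dump(full_manifest, f, sort_keys=True, indent=2)
    return manifest_path
```

Opening files with mode `"x"` lets the local filesystem reject overwrites; on object storage you would need an equivalent guard, such as refusing to write when the key already exists.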

Checkpointing in backtesting and strategy research

Backtests are extremely sensitive to data windows, fee assumptions and execution models. The best crypto backtesting software for research won’t save you if you can’t reconstruct which config produced which equity curve. Treat every backtest as a deterministic function of five inputs: data slice ID, strategy code commit, parameter vector, simulation options and random seed. Your checkpoint should store all of these plus summary metrics and key series such as PnL, drawdown and exposure. When you discover a bug in your slippage model, you can then re‑run only the affected parameter grid while preserving old results for comparison, because each run has an explicit, reproducible footprint instead of a vague description in a spreadsheet.
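To make the “deterministic function” idea concrete, the sketch below bundles the five inputs into a frozen spec, hashes it into a run ID and runs a toy backtest whose only randomness comes from a generator seeded by the spec. `BacktestSpec`, `toy_backtest` and its cost model (a fee plus uniformly random slippage, both in basis points) are hypothetical simplifications, not a realistic execution model.

```python
import hashlib
import json
import random
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class BacktestSpec:
    """Hypothetical spec holding every input that can change a backtest result."""
    data_slice_id: str
    code_commit: str
    params: tuple         # parameter vector, e.g. (("leverage", 1.0),)
    sim_options: tuple    # e.g. (("fee_bps", 5.0), ("slippage_bps", 2.0))
    seed: int

    def run_id(self) -> str:
        blob = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(blob.encode("utf-8")).hexdigest()[:16]


def toy_backtest(spec: BacktestSpec, returns: list[float]) -> dict:
    """Toy backtest: leveraged per-bar returns minus fee and seeded random slippage."""
    params, opts = dict(spec.params), dict(spec.sim_options)
    rng = random.Random(spec.seed)   # private RNG, no hidden global state
    equity, peak, max_dd, curve = 1.0, 1.0, 0.0, []
    for r in returns:
        cost = (opts["fee_bps"] + rng.uniform(0, opts["slippage_bps"])) / 10_000
        equity *= 1 + params["leverage"] * r - cost
        peak = max(peak, equity)
        max_dd = max(max_dd, 1 - equity / peak)
        curve.append(equity)
    return {"run_id": spec.run_id(), "final_equity": equity,
            "max_drawdown": max_dd, "equity_curve": curve}
```

Running the same spec twice yields identical output, while changing only the seed changes both the run ID and the result, so any difference between two stored runs can be traced to a specific input.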

Lightweight run tracking that actually gets used

Heavy MLOps stacks often die because researchers bypass them under time pressure. A practical compromise is to implement minimal run tracking first: a small CLI wrapper that launches your backtest or model script, auto‑generates a run ID, logs the git commit, config file hash and environment details, and writes everything into a structured “runs” directory. Every artifact (logs, plots, metrics JSON, pickled models) lives under that run. Later you can add a tiny web UI or notebook helper that reads this directory and lets you filter runs by tag or parameter. The key is that run logging is automatic and unobtrusive, so you capture checkpoints even during quick exploratory sessions.
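A minimal sketch of such a launcher's bookkeeping step follows. `start_run`, the `runs/<run_id>/` layout and the `meta.json`/`config.snapshot` file names are hypothetical conventions; the only external command it calls is `git rev-parse HEAD`, and it falls back to "unknown" outside a git checkout.

```python
import hashlib
import json
import platform
import subprocess
import sys
import uuid
from datetime import datetime, timezone
from pathlib import Path


def git_commit() -> str:
    """Return the current HEAD commit, or "unknown" when git or a repo is unavailable."""
    try:
        result = subprocess.run(
            ["git", "rev-parse", "HEAD"], capture_output=True, text=True, check=True
        )
        return result.stdout.strip()
    except (OSError, subprocess.CalledProcessError):
        return "unknown"


def start_run(config_path: Path, runs_root: Path, tags: tuple[str, ...] = ()) -> Path:
    """Create runs_root/<run_id>/ and record provenance before the real job starts."""
    config_bytes = config_path.read_bytes()
    started = datetime.now(timezone.utc)
    # Timestamp prefix sorts chronologically; the random suffix avoids collisions.
    run_id = f"{started:%Y%m%dT%H%M%SZ}-{uuid.uuid4().hex[:8]}"
    run_dir = runs_root / run_id
    run_dir.mkdir(parents=True)
    meta = {
        "run_id": run_id,
        "started_utc": started.isoformat(),
        "git_commit": git_commit(),
        "config_file": str(config_path),
        "config_sha256": hashlib.sha256(config_bytes).hexdigest(),
        "python": platform.python_version(),
        "platform": platform.platform(),
        "argv": sys.argv,
        "tags": list(tags),
    }
    (run_dir / "meta.json").write_text(json.dumps(meta, indent=2), encoding="utf-8")
    # Keep a byte-for-byte copy of the config actually used by this run.
    (run_dir / "config.snapshot").write_bytes(config_bytes)
    return run_dir
```

A CLI entry point would call `start_run` and then launch the actual script, passing `run_dir` to it (for example through an environment variable) so that logs, plots and metrics land under the same run.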

Crypto research tools for reproducible workflows

Instead of hunting for a magic all‑in‑one solution, think in terms of composable crypto research tools for reproducible workflows. You typically need four layers: data access, transformation, experimentation and orchestration. At the data layer, prefer client libraries that expose explicit block ranges and deterministic pagination so you can anchor checkpoints cleanly. For transformations, use frameworks that read from and write to your object storage and express dependencies between tasks, which makes it easier to rebuild only the broken pieces. Experimentation tools should integrate with your version control and accept a run ID or tag from the command line. Finally, orchestration glues it all together so that a single pipeline definition can run locally for quick tests and in the cloud for heavy workloads.
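Deterministic pagination at the data layer can be as simple as cutting a block range into fixed-size chunks whose boundaries never depend on when you run the pull. `block_chunks` and `chunk_name` are hypothetical helpers; a real client would then fetch each chunk, for example with an Ethereum JSON-RPC `eth_getLogs` call bounded by `fromBlock` and `toBlock`.

```python
from typing import Iterator


def block_chunks(start: int, end: int, size: int) -> Iterator[tuple[int, int]]:
    """Split the inclusive block range [start, end] into fixed, inclusive chunks.

    Boundaries depend only on (start, end, size), so re-running a pull yields
    exactly the same pages, and each page can be checkpointed on its own.
    """
    if size <= 0 or end < start:
        raise ValueError("need size > 0 and end >= start")
    lo = start
    while lo <= end:
        hi = min(lo + size - 1, end)
        yield lo, hi
        lo = hi + 1


def chunk_name(dataset: str, lo: int, hi: int) -> str:
    # Zero-padding keeps lexical order equal to block order in storage listings.
    return f"{dataset}/blocks_{lo:010d}_{hi:010d}"
```

If a pull fails halfway, only the missing chunk names need to be fetched again, and already materialized chunks remain valid checkpoints.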

Building automation around checkpoints

Once you have consistent checkpoints, automation becomes straightforward. Use crypto trading research automation tools to trigger downstream jobs when a new checkpoint appears. For example, when fresh hourly candles are ingested and validated, a signal generation job runs automatically against the latest stable dataset. Another pattern is nightly replay: re‑run a curated set of critical backtests against the newest data snapshot, compare their metrics with prior checkpoints and raise an alert if performance drifts beyond a threshold. Because every run is anchored to specific checkpoint IDs, you can inspect whether degradation comes from data changes, code updates or both, instead of guessing from timestamps and file names.
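The drift check in a nightly replay could be sketched as below. `metric_drift`, `nightly_check`, the record layout (`data_checkpoint`, `code_commit`, `metrics`) and the 20% default threshold are all assumptions; the point is that because each record carries checkpoint IDs, the alert can say which input changed.

```python
def metric_drift(current: dict[str, float], baseline: dict[str, float],
                 rel_threshold: float = 0.2) -> dict[str, float]:
    """Return metrics whose relative change versus the baseline exceeds rel_threshold.

    Metrics missing on either side are ignored; a zero baseline is compared absolutely.
    """
    drifted = {}
    for name in current.keys() & baseline.keys():
        base, cur = baseline[name], current[name]
        change = abs(cur - base) if base == 0 else abs(cur - base) / abs(base)
        if change > rel_threshold:
            drifted[name] = change
    return drifted


def nightly_check(run: dict, prior: dict, rel_threshold: float = 0.2) -> list[str]:
    """Compare a replayed run with the prior run of the same backtest; explain any drift."""
    drifted = metric_drift(run["metrics"], prior["metrics"], rel_threshold)
    if not drifted:
        return []
    # Checkpoint IDs tell us which inputs differ between the two runs.
    causes = [k for k in ("data_checkpoint", "code_commit") if run[k] != prior[k]]
    return [
        f"{name} drifted {change:.0%} (changed inputs: {', '.join(causes) or 'none'})"
        for name, change in sorted(drifted.items())
    ]
```

An empty list means no alert; otherwise each message names the drifting metric and whether the data checkpoint, the code commit or neither changed since the baseline.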

Reproducible environments and dependency snapshots

Data and configs are only half the story; the same code can behave differently across library versions or hardware. To close this gap, treat your environment as part of the checkpoint. Freeze Python dependencies in a lockfile, capture system libraries via containers and pin major external tools such as database engines or node clients. For CPU‑bound analytics this might be as simple as recording a Docker image tag per run. For GPU‑heavy ML research, also record CUDA and driver versions in the run metadata. When a run turns out to be important, you can spin up an identical environment from its recorded image and rerun it with the same seeds. This turns “works on my machine” from a joke into a reproducible property.
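The environment part of a run manifest can be captured with the standard library alone. In this sketch `environment_snapshot` is a hypothetical helper, and `IMAGE_TAG` and `CUDA_VERSION` are assumed to be environment variables that your container build sets; adapt them to whatever your images actually expose.

```python
import os
import platform
import sys
from importlib import metadata


def environment_snapshot(packages: list[str]) -> dict:
    """Record interpreter, OS and installed package versions for a run manifest.

    IMAGE_TAG and CUDA_VERSION are assumed to be set by the container build.
    """
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None   # record absence explicitly instead of skipping it
    return {
        "python": platform.python_version(),
        "implementation": platform.python_implementation(),
        "os": platform.platform(),
        "machine": platform.machine(),
        "executable": sys.executable,
        "packages": versions,
        "image_tag": os.environ.get("IMAGE_TAG"),
        "cuda_version": os.environ.get("CUDA_VERSION"),
    }
```

This complements, rather than replaces, a lockfile: the lockfile says what should be installed, while the snapshot records what was actually present when the run executed.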

Practical seeding and randomness control

Many crypto models rely on stochastic optimization, data shuffling or simulation of order book microstructure. Without disciplined seeding, every execution produces slightly different metrics, and debugging turns into chasing noise. Decide on a seed strategy: either a global experiment seed plus derived seeds for each component, or a fixed per‑run seed stored in the config. Log these seeds in your checkpoint manifest and wire them consistently through NumPy, PyTorch, TensorFlow and any C++ extensions you use. For Monte Carlo‑style simulators, consider storing not just the seed but also the sequence length and scenario definitions, so you can replicate the specific anomalous paths that triggered unexpected liquidations or slippage artifacts.
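The “global seed plus derived seeds” strategy can be sketched with a hash-based derivation. `derive_seed` and `seeded_rngs` are hypothetical helpers; SHA-256 is used instead of Python's built-in `hash()`, which is randomized per process for strings and would therefore break reproducibility across runs.

```python
import hashlib
import random


def derive_seed(global_seed: int, component: str) -> int:
    """Derive a stable 32-bit seed for one component from the experiment's global seed."""
    digest = hashlib.sha256(f"{global_seed}:{component}".encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


def seeded_rngs(global_seed: int, components: list[str]) -> dict[str, random.Random]:
    # One independent generator per component: adding a new component later
    # does not shift the random streams of the existing ones.
    return {c: random.Random(derive_seed(global_seed, c)) for c in components}
```

The same derived integers can be passed to `numpy.random.default_rng`, `torch.manual_seed` or `tf.random.set_seed`; NumPy's `SeedSequence.spawn` is a built-in alternative for the derivation step. For Monte Carlo replays, deriving one seed per scenario, such as `derive_seed(global_seed, f"scenario:{i}")`, lets you regenerate a single anomalous path without replaying all the others.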

Versioning research logic and configs

Reproducibility breaks fast when you edit notebooks in place. Instead, move core logic into version‑controlled modules and treat notebooks as views. Every experiment should reference an explicit git commit hash, and your run launcher should refuse to execute with uncommitted changes unless you deliberately override it. Pair this with structured config files (YAML or JSON) that define strategy parameters, data sources and feature toggles. Check these configs into the repo and embed their hash in the run ID. This combination ensures that two runs with the same code commit and config hash are logically identical; if their metrics differ, you know the cause lies in data or environment, not stealth parameter drift.
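The dirty-tree guard and the config-hash key could look roughly like this sketch. It shells out to `git status --porcelain` and `git rev-parse HEAD`, both standard git commands; the function names and the `-dirty` suffix convention are assumptions for illustration.

```python
import hashlib
import subprocess


def parse_dirty(porcelain: str) -> list[str]:
    """Return the paths listed by `git status --porcelain` (an empty list means clean)."""
    # Porcelain v1 lines are "XY PATH": two status characters, a space, then the path.
    return [line[3:] for line in porcelain.splitlines() if line.strip()]


def ensure_clean(allow_dirty: bool = False) -> str:
    """Return HEAD's commit hash, refusing to proceed on uncommitted changes unless overridden."""
    status = subprocess.run(["git", "status", "--porcelain"],
                            capture_output=True, text=True, check=True).stdout
    dirty = parse_dirty(status)
    if dirty and not allow_dirty:
        raise RuntimeError(f"refusing to run with uncommitted changes: {dirty[:5]}")
    commit = subprocess.run(["git", "rev-parse", "HEAD"],
                            capture_output=True, text=True, check=True).stdout.strip()
    # Overridden runs are marked so they can never pass for clean ones.
    return commit + ("-dirty" if dirty else "")


def logical_run_key(commit: str, config_text: str) -> str:
    """Same commit and same config bytes give the same key."""
    config_hash = hashlib.sha256(config_text.encode("utf-8")).hexdigest()[:10]
    return f"{commit[:10]}-{config_hash}"
```

If you need a unique ID per execution, append a timestamp or counter to the logical key; runs sharing the key are then the “logically identical” runs whose metric differences point to data or environment.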

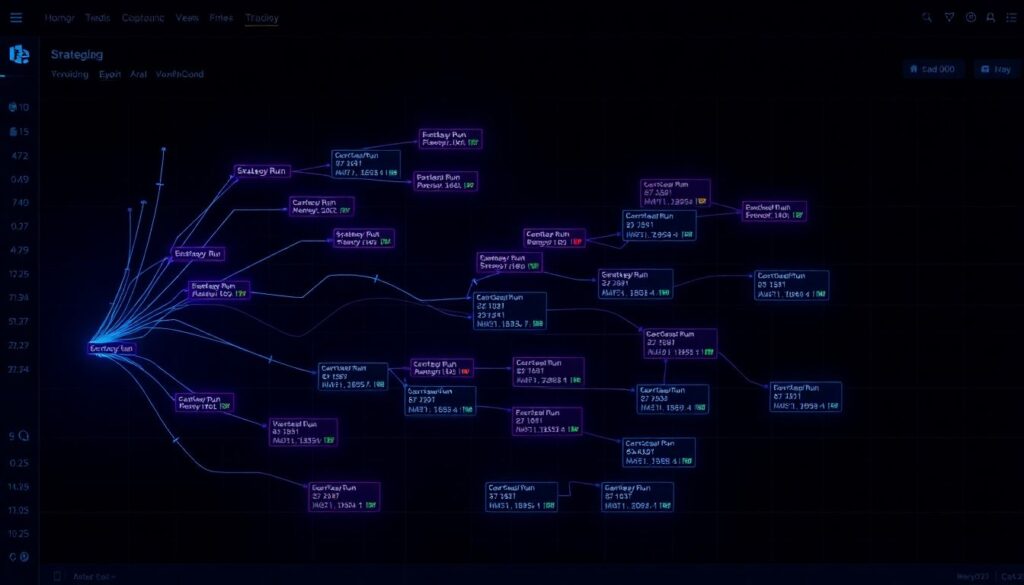

Branching and merging experimental lines

Crypto strategies often evolve in branches: one line tweaks risk rules, another explores alternative entry conditions. With structured checkpoints you can model these branches explicitly. Create a lineage tree in which each run records its parents, that is, the checkpoints and configs it was derived from. When a branch proves promising, merge its logic back into main, rerun the key experiments from that branch’s parent checkpoints and verify that performance holds under the consolidated code. This avoids the common mess where people cherry‑pick “good” runs from forgotten side experiments without understanding which exact conditions made them look so attractive in the first place.
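A lineage tree only needs each node to list its parents. This sketch, with hypothetical IDs and helper names, walks that mapping breadth-first to find everything a run depends on, plus the root checkpoints you would rerun from after a merge.

```python
from collections import deque


def ancestors(node_id: str, parents: dict[str, list[str]]) -> list[str]:
    """All checkpoints and runs that node_id was derived from, nearest first (breadth-first)."""
    order: list[str] = []
    seen: set[str] = set()
    queue = deque(parents.get(node_id, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue          # shared ancestors are listed only once
        seen.add(current)
        order.append(current)
        queue.extend(parents.get(current, []))
    return order


def root_checkpoints(node_id: str, parents: dict[str, list[str]]) -> list[str]:
    """Ancestors with no parents of their own: the raw data and configs to rerun from."""
    return [a for a in ancestors(node_id, parents) if not parents.get(a)]


# Hypothetical lineage: a risk-rule branch built on a main-line run.
parents = {
    "raw-eth-swaps": [],
    "features-v3": ["raw-eth-swaps"],
    "cfg-risk-tight": [],
    "run-main-12": ["features-v3"],
    "run-risk-07": ["run-main-12", "cfg-risk-tight"],
}
```

Here `root_checkpoints("run-risk-07", parents)` yields the tightened risk config and the raw swap snapshot, which are the inputs to replay once the branch's logic lands on main.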

Choosing tools that respect checkpointing

Many shiny platforms promise analytics dashboards while hiding the details that matter. When evaluating a blockchain data analysis platform with checkpointing support, inspect how it handles three things: explicit data versioning, exportability of raw snapshots and transparency of transformations. If the platform only lets you query “current” data without referencing snapshot IDs, you are effectively back to non‑reproducible workflows. Prefer systems where you can script data pulls with fixed snapshot or block‑range identifiers, materialize them into your own storage and run your own pipeline stack on top. This may look less convenient than a web UI at first, but it pays off the first time you must re‑justify a result to a skeptical risk committee.

Glue code and duct tape that are actually okay

Many teams hesitate to adopt proper checkpointing because they assume it requires a heavy platform migration. In reality, a thin layer of glue code often yields most of the benefits. A few simple components are enough: a run launcher script, a checkpoint manifest schema, a storage helper for saving and loading by ID, and a directory layout convention. You can bolt these onto existing notebooks and scripts incrementally. What matters is that all new work passes through this thin abstraction. Over time you can replace individual internals (switch schedulers, swap storage backends, introduce new experiment trackers) while keeping the external behavior of your checkpoints intact and your historical lineage unbroken.
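The storage helper is the piece that makes backends swappable. Below is a minimal sketch under assumed names (`CheckpointStore`, `LocalStore`, `MemoryStore`, `copy_checkpoint`): research code depends only on a two-method `typing.Protocol`, so replacing the local backend with an object-storage one would not change any caller.

```python
from pathlib import Path
from typing import Protocol


class CheckpointStore(Protocol):
    """The thin interface all research code talks to; backends are interchangeable."""
    def save(self, checkpoint_id: str, data: bytes) -> None: ...
    def load(self, checkpoint_id: str) -> bytes: ...


class LocalStore:
    """Filesystem backend; an S3/GCS/MinIO backend would implement the same two methods."""
    def __init__(self, root: Path) -> None:
        self.root = root

    def _path(self, checkpoint_id: str) -> Path:
        return self.root / checkpoint_id / "payload.bin"

    def save(self, checkpoint_id: str, data: bytes) -> None:
        path = self._path(checkpoint_id)
        path.parent.mkdir(parents=True, exist_ok=True)
        with open(path, "xb") as f:   # write-once: existing checkpoints are never overwritten
            f.write(data)

    def load(self, checkpoint_id: str) -> bytes:
        return self._path(checkpoint_id).read_bytes()


class MemoryStore:
    """In-memory backend, handy in unit tests."""
    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def save(self, checkpoint_id: str, data: bytes) -> None:
        if checkpoint_id in self._blobs:
            raise FileExistsError(checkpoint_id)
        self._blobs[checkpoint_id] = data

    def load(self, checkpoint_id: str) -> bytes:
        return self._blobs[checkpoint_id]


def copy_checkpoint(src: CheckpointStore, dst: CheckpointStore, checkpoint_id: str) -> None:
    # Migrating between backends keeps IDs, and therefore lineage, intact.
    dst.save(checkpoint_id, src.load(checkpoint_id))
```

Both backends enforce the same write-once rule, so swapping one for the other changes where bytes live but not how checkpoints behave.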

Bringing it all together

Checkpointing and reproducibility in crypto research workflows are not optional extras; they are the backbone that allows you to iterate aggressively without losing epistemic control. A well‑designed reproducible blockchain research framework lets you refactor strategies, onboard new team members and defend your results under external scrutiny, all while experimenting at a fast pace. Start small: lock your environments, log every run with a unique ID, snapshot key datasets and keep configs versioned. Then layer on automation, lineage tracking and richer manifests. Over a few months the compounding effect is dramatic: less time re‑deriving old insights, and more time exploring genuinely new ideas with the confidence that, if something works, you can always prove exactly how you got there.